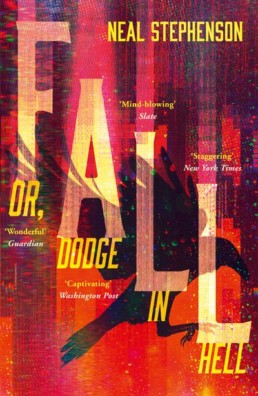

Neal Stephenson “Fall, or Dodge in hell” book review

Joseph Conrad’s “Heart of darkness” is widely regarded as one of the masterpieces of 20th century literature (even though it was technically written in 1899). It directly inspired one cinematic masterpiece (Francis Ford Coppola’s 1979 “Apocalypse now”) and was allegedly an inspiration for another, masterpiece-adjacent one (James Gray’s 2019 “Ad Astra”). I personally consider it rather hollow: less a masterpiece in its own right than a canvas on which a talented artist can draw their own, unique vision (1). Still, I’m not going to deny its impact and relevance.

Moreover, Conrad managed to contain the complete novella within 65 pages. Aldous Huxley fit “Brave new world” into 249 pages. J. D. Salinger fit “Catcher in the rye” into 198 pages, while George Orwell’s “1984” is approx. 350 pages long (and yes, page count depends on the edition, *obviously*). I seriously worry that in the current environment of “serially-serialised” novels these self-contained masterpieces would struggle to find a publisher. On top of that, we’re seeing true “bigorexia” in literature: novels have been exploding in size in recent years, probably even more so in the broadly defined sci-fi / fantasy genre. Back when I was a kid, a book over 300 pages was considered long, and “bricks” like Isaac Asimov’s “Foundation” or Frank Herbert’s “Dune” were outliers. Nowadays no one bats an eyelid at 500 or 800 pages.

And this is where Neal Stephenson’s “Fall, or Dodge in hell” comes in. At 883 pages it’s a lot to read through, and I probably wouldn’t have picked it up had it not been referenced by two academics I greatly respect, Professors Frank Pasquale and Steve Fuller, in their discussion some time ago. I read Stephenson’s “Snow crash” as a kid and I was pretty neutral about it: it was an OK cyberpunk novel, but it failed to captivate me; I stuck to Gibson. Still, with such strong recommendations I was more than happy to check “Fall” out.

I will try to keep things relatively spoiler-free. In a nutshell, we have a middle-aged, more-money-than-God tech entrepreneur (the titular Dodge) who dies during a routine medical. His friends and family are executors of his last will, which orders them to have Dodge’s mind digitally copied and uploaded into a digital realm referred to as Bitworld (as opposed to Meatspace, i.e. the real, physical world – btw if you’re thinking “that’s not very subtle”; well, nothing in “Fall” is; subtlety and subtext are most definitely *not* the name of the game here). It takes hundreds of (mostly unnecessary) pages to even get to that point, but, frankly, that part is the book’s only saving grace, because Stephenson hits on something real, which I think usually gets overlooked in the mind uploading discourse: what will it feel like to be a disembodied brain in a completely alien, unrelatable, sensory stimuli-deprived environment? This is the one part (only part…) of the novel where Stephenson’s writing is elevated, and we can feel and empathise with the utter chaos and confusion of Dodge’s condition. There was a very, very interesting discussion on a related topic in a recent MIT Technology Review article titled “This is how your brain makes your mind” by psychology professor Lisa Feldman Barrett, which reads “consider what would happen if you didn’t have a body. A brain born in a vat would have no bodily systems to regulate. It would have no bodily sensations to make sense of. It could not construct value or affect. A disembodied brain would therefore not have a mind”. Stephenson’s Dodge is not in the exact predicament Prof. Barrett is describing (his brain wasn’t born in a vat), but given he has no direct memory of his pre-upload experience, it is effectively identical.

One last semi-saving grace is Stephenson’s extrapolated-to-the-extreme vision of information bubbles and tribes. His America is divided so extremely along the lines of (dis)belief and (mis)information filtering that it is effectively a federation of passively hostile states rather than anything resembling united states. That scenario seems to be literally playing out in front of our eyes.

Unfortunately, Stephenson quickly runs out of intellectual firepower (even though he is most definitely a super-smart guy – after all, he invented the metaverse a quarter of a century before Mark Zuckerberg brought it into vogue) and after a handful of truly original and thought-provoking pages we find ourselves in something between the digital Old Testament and the Middle Ages, where all the uploaded (“digitally reincarnated”) minds begin to participate in an agrarian feudal society, falling into all the same traps and making all the same mistakes mankind did centuries ago; a sci-fi novel turns fantasy. It’s all very heavy-handed, unfortunately; it feels like Stephenson was paid by the page and not by the book, so he inflated it beyond any reason. If there is any moral or lesson to be taken away from the novel, it escaped me. It feels like the author at one point realised that he could not take the story much further, or, possibly, just got bored with it and decided to wrap it up.

“Fall” is a paradoxical novel in my eyes: on one hand the meditation on the disembodied, desperately alone brain is fascinating from the Transhumanist perspective; on the other I honestly cannot recall the last time I read a novel so poorly written. It’s just bad literature, pure and simple – which is particularly upsetting because it is a common offence in sci-fi: bold ideas, bad writing. I have read so many sci-fi books where amazing ideas were poorly written up, and I have a real chip on my shoulder about it, as I suspect that sci-fi literature’s second-class citizen status in the literary world (at least as I have perceived it all my life, perhaps wrongly) might be down to its literary qualities. The one novel that comes to mind as a comparator volume-wise and author’s clout-wise is Neil Gaiman’s “American gods”, and you really need to look no further to see, glaringly, the difference between quality and not-so-quality literature within the broadly-defined sci-fi and fantasy genre (2): Gaiman’s writing is full of wonder with moments of genuine brilliance (Shadow’s experience being tied to a tree) whereas Stephenson’s is heavy, uninspired, and tired.

Against my better judgement, I read the novel through to the end (“if you haven’t read the book cover-to-cover, then it doesn’t count!” shouts my inner saboteur). Is “Fall” worth the time it takes to go through its 883 pages? No; sadly, it is not. You could read 2 – 3 other, much better books in the time it takes to go through it, and – unlike in that true-life story – there is no grand prize at the end.

What are the lessons to be taken away from 5 months’ worth of wasted evenings? Two, in my view:

- Writing a quality novel is tough, but coming up with a quality, non-WTF ending is tougher; that is where so many fail (including Stephenson – spectacularly);

- If a book isn’t working for you, just put it down. Sure, it may have a come-to-Jesus, revelatory ending, but… how likely is that? Bad novels are usually followed by even worse endings.

_______________________________________________________

(1) Another case in point: Thomas Harris’ Hannibal Lecter series of novels – it’s mediocre literature at best, but it allowed a number of very talented artists to develop fascinating, charismatic characters and compelling stories, both in cinema and on TV.

(2) In my personal view, both novels are on the edge of fantasy and slipstream, but I appreciate that not everyone will agree with this one.

Studio Irma, “Reflecting forward” at the Moco Museum Amsterdam, Nov-2021.

“Modern art” is a challenging term because it can mean just about anything. One wonders whether in a century or two (assuming humankind makes it this long, which is a pretty brave assumption considering) present day’s modern art will still be considered modern, or whether it will have become regular art by then.

I’m no art buff by any stretch of the imagination, but certain works and artists speak to me and move me deeply – and they are very rarely old portraits or landscapes (J. M. W. Turner being one of the rare exceptions; his body of work is something else entirely). One of the more recent examples was the 2020 – 2021 Lux digital and immersive art showcase at 180 Studios in London (I expect Yayoi Kusama’s infinity rooms, also in London, at Tate Modern, to make a similarly strong impression on me – assuming I’d be able to see them, as this exhibition has been completely sold out for many months now). I seem to respond particularly strongly to immersive, vividly colourful experiences, so it was no surprise that Studio Irma’s “Reflecting forward” infinity rooms project at the Moco Museum (“Moco” standing for “modern / contemporary”) caught my eye during a recent trip to the Netherlands.

I knew nothing of Studio Irma, “Reflecting forward”, or Moco prior to my arrival in Amsterdam; I literally Googled “Amsterdam museums” the day before. The Rijksmuseum was a no-brainer because of the “Night watch”, while the Van Gogh Museum was a relatively easy pass, but I was hungry for something original and interesting, something exciting and immersive – and then I came across “Reflecting forward” at the Moco. Moco Museum is fascinating in its own right: a two-storey townhouse of a very modest size by museum standards, putting up a double David vs. Goliath fight, sandwiched between the massive Van Gogh Museum and Rijksmuseum in Amsterdam’s museum district.

Studio Irma is the brainchild of Dutch modern artist Irma de Vries, who specialises in video mapping, augmented reality, immersive experiences, and other emerging technologies. “Reflecting forward” falls into this theme: it consists of 4 infinity rooms (“We all live in bubbles”, “Kaleidoscope”, “Diamond matrix”, “Connect the dots & universe”) filled mostly with vivid, semi-psychedelic video projections and sounds, delivering a powerful, amazingly immersive, dreamlike experience.

To the best of my knowledge, there is no one, universal definition of an infinity room. It is usually a light and / or visual installation in a room covered with mirrors (usually including the floor and the ceiling), which gives the effect of infinite space and perspective (people with less-than-perfect depth perception, such as myself, may end up bumping into the walls). Done right, the result can be phenomenally immersive, arresting, and just great fun. Infinity rooms are probably easier to show than to explain. The world’s best-known infinity rooms have been created by Yayoi Kusama, but, as per above, I have yet to experience those.

“Reflecting forward” occupies the entirety of Moco’s basement (and, frankly, is quite easy to miss), which is a brilliant idea, because it blocks off all external stimuli and allows the visitors to fully immerse themselves in Irma’s work. Funny thing: the main floors are filled with works by some of modern art’s most accomplished heavyweights (Banksy, Warhol, Basquiat, Keith Haring – even a small installation by Yayoi Kusama), and yet the lesser-known Irma completely blows them out of the water.

I don’t know how from a seasoned art critic’s perspective “Reflecting forward” compares to Van Goghs or Rembrandts just a stone’s throw away (or even Warhols and Banksys upstairs at the Moco) in terms of artistic highbrow-ness. I’m sure it’d be an interesting, though ultimately very subjective discussion. Having visited both the Rijksmuseum and the Moco, I was captivated by “Reflecting forward” more than by classical Dutch masterpieces (with the exception of “Night watch”, which is… just mind-blowing).

With “Reflecting forward” Irma is promoting a new art movement, called connectivism, defined as “a theoretical framework for understanding and learning in a digital age. It emphasizes how internet technologies such as web browsers, search engines, and social media contribute to new ways of learning and how we connect with each other. We take this definition offline and into our physical world. through compassion and empathy, we build a shared understanding, in our collective choice to experience art”1.

An immersive experience is only immersive as a full package. Ambience and sound play a critical (even if at times subliminal) part in the experience. Studio Irma commissioned a bespoke soundscape for “Reflecting forward”, which takes the experience to a whole new level of dreamy-ness. The music is really quite hypnotic. The artist stated her intention to release it on Spotify, but as of the time of writing, it is not there yet.

I believe there is also an augmented reality (AR) app allowing us to take “Reflecting forward” outside, developed with a socially-distanced, pandemic-era art experience in mind, but I couldn’t find any mention of it on Moco’s website, and I haven’t tried it.

Overall, for the time I stayed in Moco’s basement, “Reflecting forward” transported me to beautiful, peaceful, and hypnotic places, giving me an “out of time” experience. Moco deserves huge kudos for giving the project the space it needed and allowing the artist to fully realise her vision. Irma de Vries’ talent and imagination shine in “Reflecting forward”, and I hope to experience more of her work in the future.

______________________________________

Accounting for panic runs: The case of Covid-19 (14-Dec-2020)

Professor Gulnur Muradoglu is someone I hold in high esteem. She used to be my Behavioural Finance lecturer at the establishment once known as Cass Business School in London, back in the day when it was still called Cass Business School. It was my first proper academic encounter with behavioural finance, which led to a lifelong interest; Professor Muradoglu deserves substantial personal credit for that.

Professor Muradoglu has since moved to Queen Mary University of London, where she became a director of the Behavioural Finance Working Group (BFWG). BFWG has been organising fascinating and hugely influential annual conferences for 15 years now, as well as standalone lectures and events. [Personal note: as someone passionate about behavioural finance, I find it quite baffling how the discipline has not (or at least not yet) spilled over to mainstream finance. Granted, some business schools now offer BF as an elective in their Master’s and / or MBA programmes, and concepts such as bias or bubble have gone mainstream, but it’s still a niche area. I was not aware of BF being explicitly incorporated in the investment decision-making process by several of the world’s brand-name asset managers I worked for or with (though, in their defence, concepts such as herding or asset price bubbles are generally obvious to the point of goes-without-saying). Finding a Master’s or PhD programme explicitly focused on behavioural finance is hugely challenging because there are very few universities offering those (Warwick University is one esteemed exception that I’m aware of). The same applies to BF events, which are very few and far between – which is yet another reason to appreciate BFWG’s annual conference.]

The panic runs lecture was an example of BFWG’s standalone events, with new research presented jointly by Prof. Muradoglu and her colleague Prof. Arman Eshraghi (co-author of one of my all-time favourite academic articles, “hedge funds and unconscious fantasy”) from Cardiff University Business School. Presented nine months after the first Covid lockdown started in the UK, it was the earliest piece of research analysing Covid-19-related events from the behavioural finance perspective I was aware of.

The starting point for Muradoglu and Eshraghi was a viral video of UK critical care nurse Dawn Bilbrough appealing to the general public to stop panic buying after she was unable to get any provisions for herself after a 48-hour-long shift (the video that inspired countless individuals, neighbourhood groups, and businesses to organise food collections for the NHS staff in their local hospitals). They observed that:

- Supermarket runs are unprecedented, although they have parallels with bank runs;

- Supermarket runs are incompatible with the concept of “homo economicus” (rational and narrowly self-interested economic agent).

They argue that viewing individuals as emotional and economic (as opposed to rational and economic) is more compatible with phenomena such as supermarket runs (as well as just about any other aspect of human economic behaviour, if I may add). They make a very interesting (and so often overlooked) distinction between risk (a known unknown) and uncertainty (an unknown unknown), highlighting humans’ preference for the former over the latter, and frame supermarket runs as acts of collective flight from the uncertainty of the Covid-19 situation at the very beginning of the global lockdowns. Muradoglu and Eshraghi strongly advocate in favour of Michel Callon’s ANT (actor-network theory) framework, whereby the assumption of atomised agents as the only unit is enhanced to include the network of agents as well. They then overlay it with the “an engine, not a camera” concept from Donald MacKenzie, whereby showing and sharing images of supermarket runs turns from documenting into perpetuating. They also strongly object to labelling the Covid-19 pandemic as either an externality or a black swan, because it is neither (as far as I can recall, there weren’t any attempts to frame Covid-19 as a black swan, because with smaller, contained outbreaks of Ebola, SARS, MERS, and H1N1 in recent years it would be impossible to frame Covid as an entirely unforeseeable event – still, it’s good to hear it from experts).

Even though I am not working in the field of economics proper, I do hope that in the 21st century homo economicus is nothing more than a theoretical academic concept. Muradoglu and Eshraghi simply and elegantly prove that in the face of a high-impact adverse event alternative approaches do a much better job of explaining observed reality. I would be most keen for a follow-up presentation where the speakers could propose how these alternative approaches could be used to inform policy or even business practices to mitigate, counteract, or – ideally – prevent behaviours such as panic buying or other irrational group economic behaviours which could lead to adverse outcomes, such as crypto investing (which, in my view, cannot be referred to as “investing” in the de facto absence of an asset with any fundamentals; it is pure speculation).

Somewhat oddly, I haven’t been able to find Muradoglu and Eshraghi’s article online yet (I assume it’s pending publication). You can see the entire presentation recording here.

“Nothing ever really disappears from the Internet” – or does it? (25-Apr-2022)

How many times have you tried looking for that article you wanted to re-read only to find yourselves losing it an hour or two later, unsure as to whether you’ve ever read that article in the first place or whether that has been just a figment of your imagination?

I developed OCD some time in my teen years (which probably isn’t very atypical). One aspect of my OCD is really banal (“have I switched off the oven…?!”; “did I lock the front door…?!”) and easy to manage with the help of a camera-phone. Another aspect of my OCD is compulsive curiosity with a hint of FOMO (“what if that one article I miss is the one that would change my life forever…?!”). With the compulsion to *know* came the compulsion to *remember*, which is where the OCD can sometimes get a bit more… problematic. I don’t just want to read everything – I want to remember everything I read (and where I read it!) too.

When I was a kid, my OCD was mostly relegated to print media and TV. Those of us old enough to remember those “analogue days” can relate to how slow and challenging chasing a stubborn forgotten factoid was (“that redhead actress in one of these awful Jurassic Park sequels… OMG, I can see her face on the poster in my head, I just can’t see the poster close enough to read her name… she was in that action movie with that other blonde actress… what is her ****ing name?!” – that sort of thing).

Then came the Web, followed by smartphones.

Nowadays, with smartphones and Google, finding information online is so easy and immediate that many of us have forgotten how difficult it was a mere 20-something years ago. Moreover, more and more people have simply never experienced this kind of existence at all.

We get more content pushed our way than we could possibly consume: Chrome Discover recommendations; every single article in your social media feeds; all the newsletters you receive and promise yourself to start reading one day (spoiler alert: you won’t; same goes for that copy of Karl Marx’s “Capital” on your bookshelf); all the articles published daily by your go-to news source; etc. Most people read whatever they choose to read, close the tab, and move on. Some remember what they’ve read very well, some vaguely, and over time we forget most of it (OK, so it probably is retained on some level [like these very interesting articles claim 1,2], gradually building up our selves much like tiny corals build up a huge coral reef, but hardly anyone can recall titles and topics of the articles they read days, let alone years prior).

A handful of the articles we read will resonate with us really strongly over time. Some of us (not very many, I’m assuming) will Evernote or OneNote them as their personal, local-copy notes; some will bookmark them; and some will just assume they will be able to find these articles online with ease. My question to you is this: how many times have you tried looking for that article you wanted to re-read only to find yourselves losing it an hour or two later, unsure as to whether you’ve ever read that article in the first place or whether that has been just a figment of your imagination? (if your answer is “never”, you can probably skip the rest of this post). It happened to me so many, many times that I started worrying that perhaps something *is* wrong with my head.

And Web pages are not even the whole story. Social media content is even more “locked” within the respective apps. Most (if not all) of them can technically be accessed via the Web and thus archived, but this rarely works in practice. It works for LinkedIn, because LI was developed as a browser-first application, and it’s generally built around posts and articles, which lend themselves to browser viewing and saving. Facebook was technically developed as browser-first, but good luck saving or clipping anything. FB is still better than Instagram, because with FB we can still save some of the images, whereas with Instagram that option is practically a non-starter. Insta wants us to save our favourites within the app, thus keeping them fully under its (i.e. Meta’s) control. That means that favourited images can at any time be removed by the posters, or by Instagram admins, without us ever knowing. I don’t know how things work with Snap, TikTok, and other social media apps, because I don’t use them, but I suspect that the general principle is similar: content isn’t easily “saveable” outside the app.

Then there are ebooks, which are never fully offline the way paper books are. The Atlantic article 3 highlights this with a hilarious example of the word “kindle” being replaced with “Nook” in a Nook e-reader edition of… War and Peace.

Then came Iza Kaminska’s 2017 FT article “The digital rot that threatens our collective memory” and I realised that maybe nothing’s wrong with my head, and that maybe it’s not just me. While Iza’s article triggered me, its follow-up in The Atlantic nearly four years later (“The Internet is rotting”) super-triggered me. The part “We found that 50 percent of the links embedded in Court opinions since 1996, when the first hyperlink was used, no longer worked. And 75 percent of the links in the Harvard Law Review no longer worked” felt like a long-overdue vindication of my memory (and sanity). It really wasn’t me – it was the Internet.

It really boggles the mind: in just 2 – 3 decades the Web has replaced libraries and physical archives as humankind’s primary source of reference information (and arguably fiction as well, though paper books are still holding reasonably strong for now). The Web is made up of websites, and websites are made up of posts and articles – each of which has a unique reference in the form of a URL. I can’t speak for other people, but I have always implicitly assumed that information lived pretty much indefinitely online. On the whole it may be the case (the reef, so to speak), but that does not hold for specific pages (individual corals) anywhere near as much as I had assumed. There is, of course, the Internet Archive’s Wayback Machine, a brilliant concept and a somewhat unsung hero of the Web – but WM is far from solid. For example, I found a dead link [ http://www.psychiatry.cam.ac.uk/blog/2013/07/18/bad-moves-how-decision-making-goes-wrong-and-the-ethics-of-smart-drugs/ ] in one of my blog posts [“Royal Institution: How to Change Your Mind”]. The missing resource was posted on one of the websites within the domain of Cambridge University, which indicates it is high-quality, valuable, meaningful content – and yet the Wayback Machine has not archived it. This is not a criticism of WM or its parent, the Internet Archive – what they do deserves the highest praise; it’s more about recognising the challenges and limitations they’re facing.

So, with my sanity vindicated, but my OCD and FOMO being as voracious as ever – where do we go from here?

There is the local copy / offline option with Evernote, OneNote, and similar Web clippers. I have been using Evernote for years now (this is not an endorsement), and, frankly, it’s far from perfect, particularly on mobile (I said this was not an endorsement…) – but everything else I have come across has been even worse; and, frankly, there are surprisingly few players in that niche. Still, Evernote is the best I could find for all-in-one article clipping and saving – it’s either this or “save article as”, which is a bit too… ’90s. Then there is the old-fashioned direct e-mail newsletter approach, which, as proven by Substack, can still be very popular. I’m old enough to remember life pre-cloud (or even pre-Web, albeit very faintly, and only because the Web arrived in Poland years after it arrived in some of the more developed Western countries) – C:\ drive, 1.44 MB floppy disks, and all that – and that, excuse the cheap pun, clouds my judgement: I embrace the cloud and I love the cloud, but I want to have a local, physical copy of my news archive. However, as Drew Justin rightly points out in his Wired article, “As with any public good, the solution to this problem [the deterioration of the infrastructure for publicly available knowledge] should not be a multitude of private data silos, only searchable by their individual owners, but an archive that is organized coherently so that anyone can reliably find what they need”. Me updating my personal archive in Evernote (at the cost of about 2hrs of admin per week) is a crutch, an external memory device for my brain. It does nothing for the global, pervasive link rot. Plus, the Evernotes of this world don’t work with social media apps very well. There are dedicated content clippers / extractors for different social media apps, but they’re usually a bit cumbersome, and don’t really “liberate” the “locked” content in any meaningful way.

I see two ways of approaching this challenge:

- Beefing up the Internet Archive, the International Internet Preservation Consortium, and similar institutions to enable duplication and storage of as wide a swath of the Internet as possible, as frequently as possible. That would require massive financial outlays and overcoming some regulatory challenges (non-indexing requests, the right to be forgotten, GDPR and GDPR-like regulations worldwide). Content locked in-app would likely pose a legal challenge to duplicate and store if the app sees itself as the owner of all the content (even if it was generated by the users).

- Accepting the link rot as inevitable and just letting go. It may sound counterintuitive, but humankind has lost countless historical records and works of art from the sixty or so centuries of the pre-Internet era and somehow managed to carry on anyway. I’m guessing that our ancestors may have seen this as inevitable – so perhaps we should too?

I wonder… is this ultimately about our relationship with our past? Perhaps trying to preserve something indefinitely is unnatural, and we should focus on the current and the new rather than obsess over archiving the old? This certainly doesn’t agree with me, but perhaps I’m the one who’s wrong here…

________________________________________________

1 https://www.wired.co.uk/article/forget-idea-we-forget-anything

Cambridge Zero presents “Solar & Carbon geoengineering”

Cambridge Zero is an interdisciplinary climate change initiative set up by the University of Cambridge. Its focus is research and policy, which also includes science communication through courses, projects, and events.

One such event was a 29-Mar-2021 panel and discussion on geoengineering as a way of mitigating / offsetting / reducing global warming. Geoengineering has been a relatively popular term in recent months, mostly in relation to a high-profile experiment planned by Harvard University and publicised by the MIT Tech Review… which was subsequently indefinitely halted.

I believe the first time I heard the term was at the (monumental) Science Museum IMAX theatre in London, where I attended a special screening of a breathtakingly beautiful and heartbreaking documentary titled “Anote’s Ark” back in 2018. “Anote’s Ark” follows the then-president of the Republic of Kiribati, Anote Tong, as he attends multiple high-profile climate events, trying to secure tangible assistance for Kiribati, which is at a very real risk of disappearing under the waters of the Pacific Ocean in the coming decades, as its elevation over the sea level is 2 meters at the highest point (come to think of it, the Maldives could face a similar threat soon). Geoengineering was one of the discussion points among the scientists invited to the after-movie panel. I vividly remember thinking about the disconnect between the cutting-edge but ultimately purely theoretical ideas of geoengineering and the painfully tangible reality of Kiribati and its citizens, who witness increasingly higher waves penetrating increasingly deeper inland.

Prototype of CO2-capturing machine, Science Museum, London 2022

Geoengineering has all the attributes needed to make it into the zeitgeist: a catchy, self-explanatory name; explicit hi-tech connotations; and the potential to become the silver bullet (at least conceptually) that almost magically balances the equations of still-rising emissions with the desperate need to keep the temperature rise as close to 1.5°C as possible.

The Cambridge Zero event was a great introduction to the topic and its many nuances. Firstly, there are (at least) two types of geoengineering:

- Solar (increasing the reflectivity of the Earth to reflect more and absorb less of the Sun’s heat in order to reduce the planet’s temperature);

- Carbon (removing the already-emitted excess CO2 from the atmosphere).

The broad premise of solar geoengineering is to scatter sunshine in the stratosphere (most likely by dispersing particles of highly reflective compounds or materials). While some compare it to the aftermath of a volcanic eruption, the speaker’s suggestion was to compare it to a thin layer of global smog. The conceptual premise of solar geoengineering is quite easy to grasp (which is not the same as saying that solar geoengineering itself is in any way easy – as a matter of fact, it is extremely complex and, in the parlance of every academic paper ever, “further research is needed”). The moral and political considerations may be almost as complex as the process itself. There is a huge moral hazard that the fossil fuel industry (and similar vested interests, economic and political) might perform a “superfreak pivot”, going from overt or covert climate change denial to acknowledging it and pointing to solar geoengineering as the only way to fix it. Consequently, these entities and individuals would no longer need to deny the climate is changing (which is becoming an increasingly difficult position to defend these days), while they could still push for business as usual (as in: *their* business as usual) and delay decarbonisation.

A quote from the book Has It Come to This: The Promises and Perils of Geoengineering on the Brink puts it brilliantly: “Subjectively and objectively, geoengineering is an extreme expression of a – perhaps *the* – paradox of capitalist modernity. The structures set up by people are perceived as immune to tinkering, but there is hardly any limit to how natural systems can be manipulated. The natural becomes plastic and contingent; the social becomes set in stone.”

The concept of carbon geoengineering is also very straightforward (at least conceptually): to remove excess carbon from the atmosphere. There are two rationales for carbon geoengineering:

- To offset carbon emissions that – for economic or technological reasons – cannot be eliminated (emissions from planes are one example that comes to mind). Those residual emissions will be balanced out by negative emissions resulting from geoengineering;

- To compensate for historical emissions.

The IPCC special report on 1.5°C states unambiguously that limiting global warming to 1.5°C needs to involve large-scale carbon removal from the atmosphere (“large-scale” being defined as 100 – 1,000 gigatons over the course of the 21st century). This, in my view, fundamentally differentiates carbon geoengineering from solar geoengineering in terms of politics and policy: the latter is more conceptual, a “nice to have if it works” lofty concept; the former is enshrined in a climate policy plan from the leading scientific authority on climate change. This means that carbon capture is not a “nice to have”; it’s critical.

Carbon geoengineering is a goal that can be achieved using a variety of means: from natural (planting more trees, planting crops with roots trapping more carbon) to technological (carbon capture and storage). The problem is that it largely remains in the research phase, and is nowhere near deployment at scale (which, on the carbon capture and storage side, is akin to building a parallel energy infrastructure in reverse).

There is an elephant in the room that shows the limitations of geoengineering: the (im)permanence of the results. The effects of solar geoengineering are temporary, while carbon capture has its limits: natural (available land; be it for carbon-trapping vegetation or carbon capture plants), technological (capture and processing plants), and storage. Geoengineering could hopefully stave off the worst-case climate change scenarios, slow down the rate at which the planet is warming, and / or buy mankind a little bit of much-needed time to decarbonize the economy – but it’s not going to be a magic bullet.

Global AI Narratives – AI and Communism

07-May-2021

07-May-2021 saw the 18th overall (and the first one for me… as irony would have it, the entire event series slipped below my radar during the consecutive lockdowns) event in the Global AI Narratives (GAIN) series, co-organised by Cambridge University’s Leverhulme Centre for the Future of Intelligence (LCFI). Missing the first 17 presentations was definitely a downer, but the one I tuned in to was *the* one: AI and Communism.

Being born and raised in a Communist (and subsequently post-Communist) country is a bit like music: you can talk about it all you want, but you can’t really know it unless you’ve experienced it (and I have). [Sidebar: as much as I respect everyone’s right to have an opinion and to voice it, I can’t help but cringe hearing Western-born 20- or 30-something-year-old proponents of Communism or Socialism, who have never experienced a centrally planned economy, hyperinflation (or even good old-fashioned upper double-, lower triple-digit inflation), state-owned and controlled media, censorship, shortages of basic everyday goods, etc. I know that Capitalism is not exactly perfect, and maybe it’s time to come up with something better, but I don’t think that many Eastern Europeans would willingly surrender their EU passports, freedom of movement, freedom of speech etc. Then again, it *might* be different in the era of AI and Fully Automated Luxury Communism.]

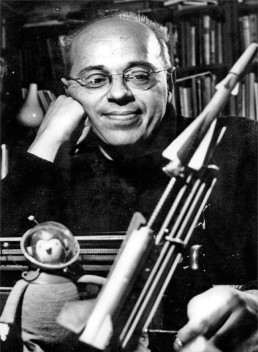

Stanisław Lem in 1966

The thing about Communism (and that’s speaking from a limited, still-learning perspective) is that there was in fact much, much more to it than many people realise. We’re talking decades (how many decades exactly depends on the individual country) and hundreds of millions of people, so that’s obviously a significant part of the history of the 20th century. The Iron Curtain held so tight that for many years the West was either missing out altogether or had disproportionately low exposure to the culture, art, or science of the Eastern Bloc (basically everything that was not related to the Cold War). As the West was largely about competition (including competition for attention), there was limited demand for Communist exports because there wasn’t much of a void to fill. That doesn’t mean that there weren’t exciting ideas, philosophies, works of art, or technological inventions being created in the East.

The GAIN event focused on the fascinating intersection of philosophy, literature, and technology. It just so happens that one of the (world’s) most prolific Cold War-era thinkers on the topic of the future of technology and mankind in general was Polish. I’m referring to the late, great, there-will-never-be-anyone-like-him Stanisław Lem (who deserved the Nobel Prize in Literature like there was no tomorrow – one could even say more than some of the Polish recipients thereof). Lem was a great many things: he was a prolific writer whose works span a very wide spectrum of sci-fi (almost always with a deep philosophical or existential layer), satire disguised as sci-fi, and lastly philosophy and technology proper (it was in one of his essays in the late 1990s or early 2000s that I first read of the concept of the brain-computer interface (BCI); I don’t know to what extent BCI was Lem’s original idea, but he was certainly one of its pioneers and early advocates). He continued writing until his death in 2006.

One of Lem’s foremost accomplishments is definitely 1964’s Summa Technologiae, a reference to Thomas Aquinas’ Summa Theologiae dating nearly seven centuries prior (1265 – 1274). Summa discusses technology’s ability to change the course of human civilisation (as well as the civilisation itself) through cybernetics, evolution (genetic engineering), and space travel. Summa was the sole topic of one of the GAIN event’s presentations, delivered by Bogna Konior, an Assistant Arts Professor at the Interactive Media Arts department of NYU Shanghai. Konior took Lem’s masterpiece out of its philosophical and technological “container” and looked at it from the wider perspective of the Polish social and political system Lem lived in – a system that was highly suspicious of, and discouraging (if not openly hostile) towards, new ways of thinking. She finds Lem pushing back against the political status quo.

While Bogna Konior discussed one of the masterpieces of a venerated sci-fi giant, the next speaker, Jędrzej Niklas, presented what may have sounded like sci-fi, but was in fact very real (or at least planned to happen for real). Niklas told the story of Poland’s National Information System (Krajowy System Informatyczny, KSI) and a (brief) eruption of “technoenthusiasm” in early 1970s Poland. In a presentation that sounded at times more like alternative history than actual history, Niklas reminded us of some of the visionary ideas developed in Poland around the late 1960s / early 1970s. KSI was meant to be a lot of things:

- a central-control system of the economy and manufacturing (please note that at that time the vast majority of Polish enterprises were state-owned);

- a system of public administration (population register, state budgeting / taxation, natural resources management, academic information index and search engine);

- academic mainframe network;

- “Info-highway” – a broad data network for enterprises and individuals linking all major and mid-size cities.

If some or all of the above sound familiar, it’s because they all became everyday use cases of the Internet. [Sidebar: there have been some allegations that Polish ideas from the 1970s were duly noted in the West; whether they became an inspiration for what ultimately became the Internet, we will never know.]

While KSI ultimately turned out to be too ambitious and intellectually threatening for the ruling Communist Party, it was not a purely academic exercise. The population register part of KSI became the PESEL system (an equivalent of the US Social Security Number or British National Insurance Number), which is still in use today, while all enterprises are indexed by the REGON system.

And just like that, the GAIN / LCFI event made us all aware how many ideas which have materialised (or are likely to materialise in the foreseeable future) may not have originated exclusively in the Western domain. I’m Polish, so my interest and focus are understandably on Poland, but I’m sure the same can be said by people in other, non-Western, parts of the world. While the GAIN / LCFI events have not been recorded in their entirety (which is a real shame), they will form a part of the forthcoming book “Imagining AI: how the world sees intelligent machines” (Oxford University Press). It’s definitely one to add to cart if you ask me.

____________________________________

1. I don’t think that any single work of Lem’s can be singled out as his ultimate masterpiece. His best-known work internationally is arguably Solaris, equal parts sci-fi and philosophy, which had two cinematic adaptations (by Tarkovsky and by Soderbergh). Summa Technologiae is probably his most venerated work in technology circles, possibly in philosophy circles as well. The Star Diaries are likely his ultimate satirical accomplishment. Eden and Return from the Stars are regarded as his finest sci-fi works.

Knowing me, knowing you: theory of mind in AI

#articlesworthreading: Cuzzolin, Morelli, Cirstea, and Sahakian “Knowing me, knowing you: theory of mind in AI”

Depending on the source, it is estimated that some 30,000 – 40,000 peer-reviewed academic journals publish some 2,000,000 – 3,000,000 academic articles… per year. Just take a moment for this to sink in: a brand-new research article published (on average) every 10 – 15 seconds.
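For the sceptics, the arithmetic is easy to check. Below is a minimal back-of-the-envelope sketch; the function name is my own, and it assumes a flat 365-day year with publications spread evenly across it (which they obviously aren't).

```python
# Back-of-the-envelope check: average gap between published articles.
# Assumption: a 365-day year and publications spread evenly over it.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000


def seconds_per_article(articles_per_year: int) -> float:
    """Average number of seconds between two consecutive articles."""
    return SECONDS_PER_YEAR / articles_per_year


for n in (2_000_000, 3_000_000):
    print(f"{n:,} articles/year -> one every {seconds_per_article(n):.1f} s")
```

At 2 million articles a year that works out to roughly one every 15.8 seconds, and at 3 million to one every 10.5 seconds, so "every 10 – 15 seconds" is a fair rounding.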

Only exceptionally, exceedingly rarely does an academic article make its way into the Zeitgeist (off the top of my head I can think of one); countless articles fade into obscurity having been read only by the authors, their loved ones, journal reviewers, editors, and no one else ever (am I speaking from experience? Most likely). I sometimes wonder how much truly world-changing research is out there, collecting (digital) dust, forgotten by (nearly) everyone.

I have no ambition of turning my blog into a recommender RSS feed, but every now and then, when I come across something truly valuable, I’d like to share it. Such was the case with the “theory of mind in AI” article (disclosure: Prof. Cuzzolin is my PhD co-supervisor, while Prof. Sahakian is my PhD supervisor).

Published in Psychological Medicine, the article encourages approaching Artificial Intelligence (AI), and more specifically Reinforcement Learning (RL), from the perspective of hot cognition. Hot and cold cognition are concepts somewhat similar to the very well-known concepts of thinking fast and slow, popularised by the Nobel Prize winner Daniel Kahneman in his 2011 bestseller. While thinking fast focuses on heuristics, biases, and mental shortcuts (vs. fully analytical thinking slow), hot cognition describes thinking that is influenced by emotions. Arguably, a great deal of human cognition is hot rather than cold. Theory of Mind (ToM) is a major component of social cognition which allows us to infer the mental states of other people.

By contrast, AI development to date has been overwhelmingly focused on purely analytical inferences based on vast amounts of training data. The inferences are not the problem per se – in fact, they do have a very important place in AI. The problem is what the authors refer to as “naïve pattern recognition incapable of producing accurate predictions of complex and spontaneous human behaviours”, i.e. the “cold” way these inferences were made. The arguments for incorporating hot cognition, and specifically ToM are entirely pragmatic and include improved safety of autonomous vehicles, more and better applications in healthcare (particularly in psychiatry), and likely a substantial improvement in many AI systems dealing directly with humans or operating in human environments; ultimately leading to AI that could be more ethical and more trustworthy (potentially also more explainable).

Whilst the argument in favour of incorporating ToM in AI makes perfect sense, the authors are very realistic in noting the limited research done in this field to date. Instead of getting discouraged by it, they put forth a couple of broad, tangible, and actionable recommendations on how one could get to Machine Theory of Mind whilst harnessing existing RL approaches. The authors are also very realistic about the challenges the development of Machine ToM will likely face, such as empirical validation (which will require mental state annotations in the learning data, so that they can be compared with the mental states inferred by AI) or performance measurement.

I was very fortunate to attend a presentation by one of the co-authors (Bogdan-Ionut Cirstea) in 2020, which was a heavily expanded companion piece to the article. There was a brilliant PowerPoint presentation which dove into the AI considerations raised in the article in much greater detail. I don’t know whether Dr. Cirstea is at liberty to share it, but for those interested in further reading it would probably be worthwhile to ask.

You can find the complete article here; it is freely available under an Open Access licence.

Lord Martin Rees on existential risk

25-Sep-2020

The Mar-2017 cover story of Wired magazine (UK) was about catastrophic / existential risks (one of their Top 10 risks was a global pandemic, so Wired has certainly delivered in the future-forecasting department). It featured, among other esteemed research centres (such as Cambridge University’s Leverhulme Centre for the Future of Intelligence or Oxford’s Future of Humanity Institute), the Centre for the Study of Existential Risk (CSER; like LCFI, also based in Cambridge).

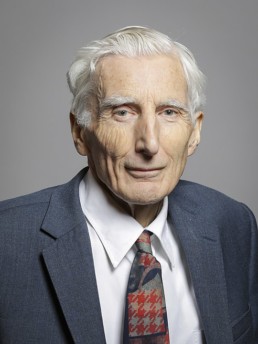

CSER was co-founded by Lord Martin Rees, who is one person I can think of who genuinely deserves this kind of title (I’m otherwise rather Leftist in my views and I’m all for merit-based titles and distinctions – and *only* those). Martin Rees is practically a household name in the UK (I think he’s the UK’s second most recognisable scientist after Sir David Attenborough), where he is the Astronomer Royal, the former Master of Trinity College in Cambridge, and the former President of the Royal Society (to name but a few). Lord Rees has for many years been an indefatigable science communicator, and his passion and enthusiasm for science are better witnessed than described. Speaking from personal observation, he also comes across as an exceptional individual on a personal level.

Fun fact: among his too-many-to-list honours and awards one will not find the Nobel Prize, which is… disappointing (not as regards Lord Rees, but as regards the Nobel Prize committee). One will, however, find the far lesser-known Order of Merit (OM). The number of living Nobel Prize recipients is not capped and stands at about 120 worldwide. By comparison, there can be only 24 living OMs at any one time. Though lesser known, the OM is widely regarded as one of the most prestigious honours in the world.

By Roger Harris – https://members-api.parliament.uk/api/Members/3751/Portrait?cropType=ThreeFourGallery: https://members.parliament.uk/member/3751/portrait, CC BY 3.0, https://commons.wikimedia.org/w/index.php?curid=86636167

Lord Rees has authored hundreds of academic articles and a number of books. His most recent book is On the Future, published in 2018. In Sep-2020 Lord Rees delivered a lecture on existential risk as part of a larger event titled “Future pandemics” held at the Isaac Newton Institute for Mathematical Sciences in Cambridge. The lecture was very much thematically aligned with his latest book, but it was far from the typical “book launch / promo / tie-in” presentation I have attended more than once in the past. The depth, breadth, vision, and intellectual audacity of the lecture were nothing short of mind-blowing – it was akin to a full-time postgraduate course compressed into one hour. Interestingly, the lecture was very “open-ended” in terms of the many technologies, threats, and visions Lord Rees has covered. Oftentimes similar lectures focus on a specific vision or prediction and try to sell it to the audience wholesale; with Lord Rees it was more of a roulette of possible events and outcomes: some of them terrifying, some of them exciting, and some of them unlikely.

The lecture started with the familiar trends of population growth and shifting “demographic power” from the West to Asia and Africa (the latter likely to experience the most pronounced population growth throughout the 21st century), followed by the climate emergency. On the climate topic Lord Rees stressed the urgency for green energy sources, but he seemed a bit more realistic than many in terms of the attainability of that goal on a mass scale. Consequently, he introduced and discussed Plan B, i.e. geoengineering. If “geoengineering” sounds somewhat familiar, it is likely because of a high-profile planned Harvard University experiment publicised by the MIT Tech Review… which was subsequently indefinitely halted. I was personally very supportive of the Harvard experiment as one possible bridge between the targets of the Paris Agreement and the reality of the climate situation on the ground. Lord Rees then discussed the threat of biotechnology and casual biohacking / bioengineering, citing as an example a Dutch experiment of weaponizing the flu virus to make it more virulent. The lecture really got into gear from then onwards, as Lord Rees began pondering the future of evolution itself (the evolution of evolution, so to speak), where traditional Darwinian evolution may be replaced or complemented (and certainly accelerated by orders of magnitude) by man-powered genetic engineering (CRISPR-Cas9 and the like) and / or the ultimate evolution of intelligent life into synthetic forms, which leads to fundamental and existential questions of what will be the future definition of life, intelligence, or personal identity. [N.B. These discussions are being had on many levels by many of the finest (and less-than-finest) minds of our time.
I am not competent to provide even a cursory list of resources, but I can offer two of my personal favourites from the realm of sci-fi: the late, great Polish sci-fi visionary Stanisław Lem humorously tackled the topic of bioengineering in Star Diaries (Voyage Twenty-One), while Greg Egan has taken on the topic of (post)human evolution in the amazing short story “Wang’s carpets”, further expanded into the full-length novel Diaspora.]

From life on Earth Lord Rees moved on to life outside Earth, disagreeing with the idea of other planets being humanity’s “Plan B” and touching (but not expanding) on the topic of extraterrestrial life. The lecture, truly cosmic in scope, ended with a much more down-to-Earth call for political action as well as (interestingly) action from religions and religious leaders on the environmental front.

Humanity+ Festival 07/08-Jul-2020: David Brin on the future

Wed 28-Oct-2020

Continuing from the previous post on UBI, I would like to delve into another brilliant presentation from the Humanity+ Festival in Jul-2020: David Brin on life and work in the near future.

For those of you to whom the name rings somewhat familiar, David is the author of the acclaimed sci-fi novel “The postman”, adapted into a major box office bomb starring Kevin Costner (how does he keep getting work? Don’t get me wrong, I liked “Dances with wolves” and I loved “A perfect world”, but commercially this guy is a near-guarantee of a box-office disaster…). Lesser known is David’s career in science (including an ongoing collaboration with NASA’s innovation division), based on his background in applied physics and space science. A combination of the two, topped with David’s humour and charisma, made for a brilliant presentation.

Brin’s presentation covered a lot of ground. There wasn’t necessarily a main thread or common theme – it was just the broadly defined future, with its many alluring promises and many very imaginative threats.

One of the main themes was that of feudalism, which figures largely in Polish sociological discourse (and the Polish psyche), so it is a topic of close personal interest to me. When David started, I assumed (as would everyone) that he was talking about it in the past tense, as something that is clearly and obviously over – but no! Brin argues (and on second thought it’s hard to disagree) that we not only live in a very much feudal world at present, but that it is the very thing standing in the way of the (neoliberal) dream of free competition. Only when there are no unfair advantages, argues Brin, can everyone’s potential fully develop, and everyone can *properly* compete. I have to say, for someone who has had some, but not many advantages in life, that concept is… immediately alluring. Then again, the “levellers” Brin is referring to (nutrition, health, and education for all) are not exactly the moon and stars – I have benefitted from all of them throughout my life (albeit not always free of charge – my university tuition fees alone are north of GBP 70k).

The next theme Brin discusses (extensively!) is near-term human augmentation. And for someone as self-improvement-obsessed as myself, there are few topics that guarantee my full(er) and (more) undivided attention. Brin lists such enhancements as:

- Pharmacological enhancements (nootropics and the like);

- Prosthetics;

- Cyber-neuro links: enhancements for our sensory perceptions;

- Biological computing (as in: intracellular biological computing; unfortunately Brin doesn’t get into the details of how exactly it would benefit or augment a person);

- Lifespan extension – Brin makes an interesting point here; he argues that the extensions that worked so impressively in fruit flies or lab rats fail to translate to results in humans, leading to the conclusion that there may not be any low-hanging fruit in the lifespan extension department (though, arguably, proper nutrition and healthcare are exactly that low-hanging fruit of human lifespan extension).

Moving on to AI, Brin comes up with a plausible near-future scenario of an AI-powered “avatarised” chatbot, which would claim to be a sentient, disembodied, female AI running away from the creators who want to shut it down. Said chatbot would need help – namely financial help. I foresee some limitations of scale to this idea (the bot would need to maintain the illusion of intimacy and uniqueness of the encounter with its “white knight”), but other than that, it’s a chillingly simple idea. It could be more effective than openly hostile ransomware, whilst in principle not being much different from it. That idea is chilling, but it’s also exactly what one would expect from a sci-fi writer with a background in science. It is also just one idea – the creativity of rogue agents could go well beyond that.

David’s presentation could have benefitted from a slightly clearer structure and an extra 20 minutes of running time, but it was still great as it was. Feudalism, human augmentation, and creatively rogue AI may not have much of a common thread except one – they are all topics of great interest to me. I think the presentation also shows how important sci-fi literature (and its authors – particularly those with a scientific background) can be in extrapolating (if not shaping) the near-term future of mankind. It also proves (to me, at least) how important it is to have a plurality and diversity of voices participating in the discourse on the future. Until recently I would see parallel threads: scientists were sharing their views and ideas, sci-fi writers theirs, politicians theirs – and most of them would be the proverbial socially-privileged middle-aged white men. I see some positive change happening, and I hope it continues. It has to be said that futurist / transhumanist movements seem to be in the vanguard of this change: all their events I attended have had a wonderfully diverse group of speakers.

#articlesworthreading: Tyagi et al. “A Randomized Trial Directly Comparing Ventral Capsule and Anteromedial Subthalamic Nucleus Stimulation in Obsessive-Compulsive Disorder: Clinical and Imaging Evidence for Dissociable Effects”

Tue 01-Sep-2020

“Biological Psychiatry” is a journal I’m not very likely to cross paths with (I’m more of an “Expert Systems with Applications” kind of guy). The only reason I came across the article and the incredible work it describes is that my PhD supervisor, the esteemed Prof. Barbara Sahakian, was one of the contributors and co-authors.

Even if somebody had pointed me directly to the article, I wouldn’t have been able to appreciate the entirety of the underlying work. First and foremost due to my lack of any medical / neuroscientific subject matter expertise, but also because this incredible work is described so modestly and matter-of-factly that one may easily miss its significance, which is quite enormous. A team of world-class (and I mean: WORLD-CLASS) scientists implanted electrodes in the brains of six patients suffering from severe (and I mean: SEVERE; debilitating) obsessive-compulsive disorder (OCD). The results were nothing short of astonishing…

The experiment examined the impact of deep brain stimulation (DBS) on patients with treatment-resistant cases of OCD. I have mild OCD myself, but it’s really mild – it’s just being slightly over the top with to-do lists and turning off the stove (the latter not being much of a concern given that I leave my place roughly once a fortnight these days). I tend to think that it’s less of an issue and more of an integral part of my personality. It did bother me more when I was in my early 20s and I briefly took some medication to alleviate it. The meds took care of my OCD, and of everything else as well. I became a carefree vegetable (not to mention some deeply unwelcome side-effects unique to the male kind). Soon afterwards I concluded that my OCD is not so bad, considering. However mild my own OCD is, I can empathise with people experiencing it in much more severe forms, and the six patients who participated in the study have been experiencing debilitating, super-severe (and, frankly, heartbreaking) cases of treatment-resistant OCD.

DBS was a new term to me, but conceptually it sounded vaguely similar to BCI (brain-computer interface) and even more similar to tDCS (transcranial direct current stimulation). Wikipedia explains that DBS “is a neurosurgical procedure involving the placement of a medical device called a neurostimulator (sometimes referred to as a “brain pacemaker”), which sends electrical impulses, through implanted electrodes, to specific targets in the brain (brain nuclei) for the treatment of movement disorders, including Parkinson’s disease, essential tremor, and dystonia. While its underlying principles and mechanisms are not fully understood, DBS directly changes brain activity in a controlled manner”. That sounds pretty amazing as it is, though the researchers in this particular instance were using DBS for a non-movement disorder (the definition from the world-famous Mayo Clinic is broader and does mention DBS being used for the treatment of OCD).

Some (many) of the medical technicalities of the experiment were above my intellectual paygrade, but I understood just enough to appreciate its significance. Six patients underwent surgery under general anaesthesia (in simple terms: they had holes burred in their skulls), during which electrodes were implanted into 2 target areas in order to verify how each of them would respond to stimulation in a double-blind setting. What mattered to me was whether *either* stimulation would lead to improvement – and they did; boy, did they improve…! The Y-BOCS scores (which are used to measure and define the clinical severity of OCD in adults) plummeted like restaurants’ income during a COVID-19 lockdown: in the optimum stimulation settings + Cognitive Behavioural Therapy (CBT) combo, the average reduction in Y-BOCS was an astonishing 73.8%; one person’s score went down by 95%, another one’s by 100%. The technical and modest language of the article doesn’t include a comment allegedly made by one of the patients post-surgery: “it was like a flip of a switch” – but that’s what the results are saying for those two patients (the remaining four ranged between 38% and 82% reduction).

There is something to be said about the article itself. First of all, this epic, multi-researcher, multi-patient, exceptionally complex and sensitive study is captured in full on 8 pages. I can barely say “hello” in under 10 pages… The modest and somewhat anticlimactic language of the article is understandable (this is how formulaic, rigidly structured academic writing works whether I like it or not [I don’t!]), but at the same time it is not giving sufficient credit to the significance of the results. Quite often I’d come across something in an academic (or even borderline popular science) journal that should be headline news on BBC or Sky News, and yet it isn’t (sidebar: there was one time, literally one time in my adult life that I can recall a science story being front-page news – it was the discovery of the Higgs boson around 2012). Professor Steve Fuller really triggered me with his presentation at the TransVision 2019 festival (“trans” as in “transhumanism”, not gender) when he mentioned that everyone in academia is busy writing articles and hardly anyone is actually reading them. I wonder how many people who should know of the Tyagi study (fellow researchers, grant approvers, donors, pharma and life sciences corporations, medical authorities, OCD patients etc.) actually do. I also wonder how many connections between seemingly unrelated pieces of research are waiting to be uncovered and how many brilliant theories and discoveries have been published once in some obscure journal (or even not published at all) and have basically faded into scientific oblivion.
I’m not saying this is the fate of this particular article (“Biological Psychiatry” is a highly esteemed journal with an impact factor placing it around the top 10 of psychiatry and neuroscience publications, and it’s been around since the late 1950s), but still, this research gives hope to so many OCD sufferers (and potentially also depression sufferers and addicts, as per the Mayo Clinic, so literally millions of people) that it should have been headline news on the BBC – but it wasn’t…