“Devs” series review

Wed 06-May-2020

I don’t watch TV (I’m using the term loosely and inclusively, i.e. including Netflixes and Amazon Prime Videos of this world) much, and when I do, I’m usually so tired that it’s either Family Guy, or an umpteenth rerun of one of my go-to masterpieces: Aliens, The Matrix, The Matrix Reloaded, Alien: Resurrection, Resident Evil, Terminator 2, Total Recall, Contagion, Predator, Basic Instinct, Batman, Batman Returns, The Dark Knight Rises – I think you get the idea. I try to make an effort to watch the more ambitious fare, but the truth is that with full-time work, studies, FOMO, and casual depression there isn’t always enough glucose in the brain to concentrate on something new and challenging. When there is, there is sometimes a great prize, a grand prix (most recently: Lee Chang-dong’s Burning… Jesus Marimba, what a movie…!). Last weekend, spurred by word of mouth, I watched Alex Garland’s (of Ex Machina and Annihilation fame) latest creation, Devs.

While Devs’ production budget is impossible to come by on the Internet, I’m guessing low- to mid-eight figures (my working guess is the USD 20m – 30m range; I’d be heavily surprised if it were a single penny over USD 50m). The show isn’t as visually blockbusting as Westworld (and let’s be clear: the two will forever be mentioned and compared in one breath, for a number of reasons: artistic ambitions, philosophical under- (or over-)tones, auteur aesthetic, focus on cutting-edge technologies, airing at the exact same time [Devs vs. Westworld season 3], and lastly, a painful lack of any humour or irony, as both shows take themselves oh so very seriously).

Entertainment – quality entertainment – often reveals a lot about the collective mindset at the time of its release (aka zeitgeist). There are, of course, exceptions: masterpieces such as 2001: A Space Odyssey or Solaris (Tarkovsky’s, not Soderbergh’s!) didn’t provoke fears of sentient AI back in the 1960s or of strange alien worlds in the 1970s respectively; they were visionary works either untethered from everyday experience, or decades ahead of their time. In recent years we could see how transhumanism became mainstream in (wildly uneven) productions such as Transcendence (can you spot the cameo of Wired magazine in one of the scenes? It’s the best acting performance of the entire movie), Limitless (which made 6x its budget), or Lucy (which made 11x its budget) – not to mention genre-defining classics such as Ghost in the Shell (the 1995 one! Not the Scarlett Johansson trainwreck) or the Matrix trilogy. Transhumanism has enormous cinematic potential, which has been nowhere near fully utilised; it has, however, had some less-than-ideal timing, because we still haven’t developed anything like Lucy’s CPH4 or Limitless’ NZT-48 on the nootropics side, nor brain-computer interfaces (BCIs) from the Matrix, cortical stacks from Altered Carbon, or cyberbrains and synthetic “shells” from Ghost in the Shell. With transhumanism still awaiting its breakthroughs, AI has provided more than enough to capture the mass imagination in recent years (Ex Machina, Westworld, Her, Humans). Devs goes a step further: to the fascinating world of quantum computing.

It’s a challenge to clearly define what genre Devs actually belongs to. It’s not science-fiction nor a technothriller, because technology – while absolutely central to the story – is not the story itself (unlike, for example, HAL in 2001). It’s not a murder mystery nor a crime thriller, because we see the murder in the first 15 minutes of the first episode, we see who killed – alas, we don’t know why they killed. The entire storyline of the series is all about understanding this seemingly absurd, unnerving “why”. The series is closest to philosophical and existential drama. It doesn’t feature red herrings or parallel timelines, and all characters, props, and plot devices appear for a reason, which makes for a refreshing break from oft-overengineered narratives of modern-day dramas. It is as close to minimalist as possible for an FX production. It is also highly stylised, beautiful, and high on artistic and technical merits.

Cinematography in Devs is an absolute delight; a technical and artistic tour de force (if Devs doesn’t get a clean sweep of the technical and art direction Emmys and Golden Globes, that’s it, I’m giving up on mankind). The cinematography is crisp and dreamlike at the same time; it has an incredible hyperreal feel. It is also rife with references – and as is often the case with not-so-overt ones, one wonders “is this an actual reference, or am I just inventing one?”. Night-time aerial sequences of San Francisco seem like a clear reference to (original) Blade Runner’s visionary imagery, while daytime sequences share a degree of Sun saturation with Dredd (an underrated masterpiece Alex Garland wrote and executive-produced). The Devs unit design is a homage to Vincenzo Natali’s Cube (there’s no way it’s accidental), while its brighter-than-sun lighting evokes 2007’s Sunshine (written by Garland). The technical quality of the cinematography shines brightest in razor-sharp, vivid, saturated night shots – which makes one contemplate the incredible technological leap of the past 2 decades; just see the night shots in one of the big-budget Hollywood productions of the 90s (off the top of my head: Interview with the Vampire) to see how the technology has progressed.

Music is at times perhaps too arthouse, but it is clearly a part of the artistic vision; it’s a standout character, not a backdrop – and most of the time, it works oh so well. Once again, one can’t help but draw comparisons with Vangelis’ groundbreaking Blade Runner score, or the equally groundbreaking selection of music for 2001. On the intelligent ambient electronica side, Cristobal Tapia de Veer’s arresting score for Channel 4’s underrated masterpiece Utopia or Cliff Martinez’s score for Steven Soderbergh’s Contagion come to mind. Westworld’s soundtrack by Ramin Djawadi is nothing to sneeze at (especially the beautifully bittersweet Sweetwater), but it’s clearly illustration music. Devs seems closer to Brian Reitzell’s avant-garde experimentations on Hannibal or the subliminal ambient background to David Lynch’s 2017 Twin Peaks revival (sound-designed by David Lynch himself).

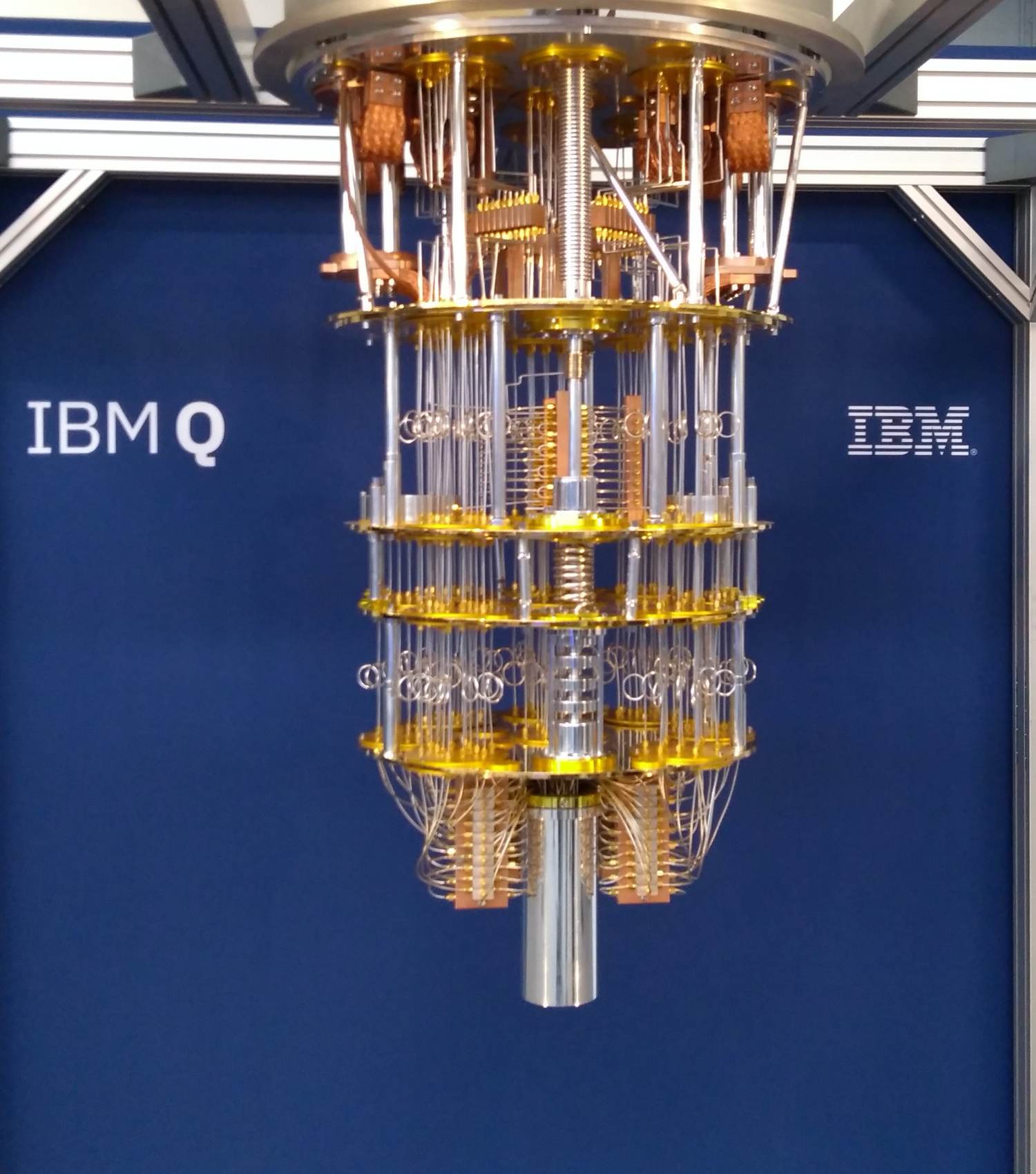

Art direction is interesting, though not on a par with music or cinematography. The San Francisco apartments of the protagonists are pretty basic (although we get the not-so-subtle message “even these basic apartments are nowadays affordable for the technocratic elite only”). The Devs’ “floating cube” is, by contrast, intentionally (?) over-the-top, while the Amaya statue towering over the campus is quite creepy, likely meant as a warning against not letting go. The way New York City was the fifth character on Sex and the City, in Devs it’s the quantum computer – which is either a carbon copy of IBM Q System One (I’ve seen it up close – a mock-up, of course, not the real, super-cooled thing), or it is actually the exact mock-up I saw (the one in Devs has some moving parts though… I’m not sure the IBM / D-Wave / Rigetti systems have any of those…). To a regular viewer it may look completely and utterly over the top, like an elongated baroque candelabra put on the floor – the weird thing is, this is exactly what a modern-day quantum computer looks like; there’s no OTT here – that’s scientific accuracy.

It doesn’t often happen that all elements click into place to create perfection (examples in recent years: American Horror Story season 1; Hannibal; Killing Eve; Fleabag; Twin Peaks: The Return), and unfortunately Devs isn’t quite so lucky. All its artistic and philosophical heft is undermined – painfully! – by terribly miscast leads. Some TV shows (again, using the term loosely) are cast pitch-perfect; the cast makes these shows (cases in point: Killing Eve; Hannibal; seasons 1, 2, and 5 of American Horror Story; Pose; characters of Dolores and Maeve in Westworld; Olive Kitteridge; Transparent). Given the budgets that go into modern series production, and the enormous stakes (as the shows become flagships for their platforms / networks and fight to compete in an increasingly saturated marketplace), casting can make or break a show. The lead cast of Devs doesn’t quite break the show, but it’s not for the lack of trying.

Sonoya Mizuno as Lily is. a. disaster. She’s got an intriguing, androgynous appeal, but – looking at a broad and subjective spectrum of similarly intriguing actresses – she doesn’t have the sex-appeal of Tao Okamoto or Ève Salvail, the talent of Rinko Kikuchi or Saoirse Ronan, nor the charisma of Carrie-Anne Moss or Chiaki Kuriyama. We know that Alex Garland can direct his actors well (after all, Ex Machina propelled Alicia Vikander to Hollywood’s A-list; she got an Oscar a year later for The Danish Girl), so the fault is likely with Mizuno. She carries Lily with the depth of an emo teenager; her acting is flat, her emotional range is limited. I felt like crying during her crying scene, but it wasn’t tears of empathy – it was cringe-cry. Nick Offerman’s Forest takes the blank-stare dead eyes so far over the top that it’s at times almost comical. Karl Glusman – an otherwise talented actor – does a Russian accent… poorly – and that’s his only contribution. Alison Pill also went over-the-top with the “brooding quantum physics genius”, doubling down on the blank stare and mistaking flatness for depth – she simply isn’t convincing as a scientist. Relative newcomer Jin Ha as Jamie is the only casting bet that pays off (and just to be clear: one doesn’t need a cast of Hollywood A-listers to make a good series – just look at Succession). His character doesn’t get that much air time, but still comes across as fully fleshed out. He’s also refreshingly sensitive and vulnerable, which are not qualities we get to see in male protagonists very often yet. He’s nowhere near hypermasculine, he’s actually really slim-built, even slightly androgynous (androgyny is a major theme in Devs: Lily, Jamie, Lyndon – it almost seems like a message: “the intellectual / technocratic elite is above the archaic gender-binary”). Zach Grenier’s is the only other half-decent performance, with redeeming flashes of irony. No Emmys here. No Golden Globes.

Devs is living proof that a powerful vision is enough to create compelling, fascinating, binge-worthy TV. It does so without a plethora of international locations (I’m looking at you, Westworld!), an abundance of CGI (I’m still looking at you, Westworld), or elaborate purpose-built sets (my eyes still on you, Westworld). Devs has precisely one blockbusting scene (the motorway sequence with Lily and Kenton). By the very virtue of its location, it evokes Bullitt; otherwise, it is reminiscent of that motorway scene in The Matrix Reloaded (obviously in a much more demure fashion – the Matrix Reloaded sequence was a stroke of cinematic genius, down to the Juno Reactor track).

As far as philosophy and technology are concerned… well, the latter is approached loosely… really loosely. It’s more of a mere notion of quantum computing than any actual science. It’s kind of the vaguest and loosest level of technological reference possible – but, judging by the reactions, it was enough to strike a chord. The reason may be two-fold: the artistic and entertainment merits of Devs, and perfect timing, as “quantum” becomes the hot new buzzword. Philosophy is more of a meditation and exploration – there are few (if any) answers or directions. It may, in fact, be one of the major strengths of Devs: it ponders and asks questions without fortune cookie-quality one-liner bits of wisdom (“There is ugliness in this world. Disarray. I choose to see beauty” – look me in the eye, Westworld…!). Westworld takes on sentience and consciousness; Devs takes on free will and determinism. It’s interesting (and impressive) how Devs gently manages to manoeuvre around the topic of religion and comes across as agnostic rather than explicitly atheistic.

Devs is a show one can describe as a “small masterpiece”. It’s slow-burning, low-key, with stunning aesthetics though no blockbusting qualities proper. The characters are not at all overtly sexy or sexual (in fact they come across as somewhat asexual, even though we know they do, actually, have sex and enjoy it). There are no heists, explosions, or enormous amounts of money (money is approached a bit like sex in Devs – we know it exists, and in great amounts, but it’s almost a non-factor for the plot). One could say that not very much is happening in Devs altogether, and they wouldn’t necessarily be wrong. But somewhere between bold artistic vision, philosophical questions, stunning visuals, and outstanding music there is a true work of art: ambiguous, intriguing, thought-provoking, and truly beautiful. I’ll treasure it all the more knowing full well how unlikely I am to see anything of remotely similar calibre anytime soon.

PS. Is it just me, or does it look like the scene where Lily and Jamie meet was filmed on the non-existent ground floor of Exchange House in Primrose Street in London?

The Polish AI landscape

Wed 29-Apr-2020

Countries most synonymous with “AI powerhouses” are without a doubt the US and China. Both have economies of scale, resources, and strategic (not just business) interests in being at the forefront of AI. The EU as a whole would probably come third, although there is always a degree of subjectivity in these rankings[i]. The UK would probably come next (owing to Demis Hassabis and DeepMind, as well as thriving scientific and academic communities). In any case, it’s rather unlikely that Poland would be listed in the top tier. Poland is known for being an ideal place to set up corporate back- or (less frequently) middle-office functions: much cheaper than Western Europe, with a huge pool of well-educated talent, in the same time zone as most of the EU. A great alternative (or complement) to setting up a campus in India, but not exactly a major player in AI research and entrepreneurship. Plus, Poland and its young democracy (dating back to 1989) are currently going through a bit of a social, identity, and political rough patch. Not usually a catalyst or enabler of cutting-edge technology.

And despite all that (and despite being a mid-sized country at best… 38 million people; and despite being #70 globally in GDP per capita in 2018 out of 239 countries and territories[ii]) for some mysterious reason Poland still made it to #15 globally in AI (using total number of companies as a metric) according to the China Academy of Information and Communications Technology (CAICT) Data Research Centre Global Artificial Intelligence Industry Data Report[iii], as kindly translated[iv] by my fellow academic Jeffrey Ding from Oxford University (whose ChinAI newsletter is brilliant and I encourage everyone to subscribe and read). I found this news so unexpected that it was the inspiration behind the entire post below.

The recent (2019) Map of the Polish AI from Digital Poland Foundation reveals a vibrant, entrepreneurial ecosystem with a number of interesting characteristics. The official Polish AI Development Policy 2019 – 2027 released around the same time by a multidisciplinary team working across a number of government ministries paints a picture of impressive ambitions, though experts have questioned their realism.

The Polish AI scene is very young (50% of the 160 organisations polled introduced AI-based services in 2017 or 2018, the most recent years in the survey). Warsaw (unsurprisingly) steals the top spot, with 85% of all companies being located in one of the 6 major metropolitan areas. The companies tend to be small: only 22% have more than 50 people; 59% have 20 or fewer. Let’s not conflate company headcount with AI teams proper – over 50% of the companies surveyed have AI teams of 5 employees or fewer. Shortage of talent is a truly global theme in AI (which I personally don’t fully agree with – companies with resources to offer competitive packages [sometimes affectionately referred to as “basketball player salaries”] have no shortage of candidates; whether this level of pay is justifiable [the very short-lived bonanza for iOS app developers circa 2008 comes to mind] and fair to the smaller players is a different matter). The additional challenge in Poland is that Polish salaries simply cannot compete with what is on offer within 3 hours’ flight – many talented computer scientists are naturally tempted to move to places like Berlin, Paris, London or other major European AI hubs where there are more opportunities, more developed AI ecosystems, and much, much better money to be made.

What stands out is the ultra-close connection between business and academic communities. While the same is the case in most countries seriously developing AI, some of them are home to global tech corporates whose financial resources and thus R&D capabilities give them the luxury to develop on their own, on a par with (if not ahead of) leading research institutions. These corporates’ resources also enable them to poach world-class talent (e.g. Google hiring John Martinis to lead their quantum computer efforts [who has since left…], Facebook appointing Yann LeCun as head of AI research, or Google Cloud poaching [albeit briefly] Fei-Fei Li as their Chief Scientist of AI/ML). In Poland this does not apply – it does not have any large (or even mid-size) home-grown innovative tech firms. The ultra-close connection between business and academia is a logical consequence of these factors – plus in a 38-million-strong country with relatively few major cities serving as business and academic hubs, the entire ecosystem simply can’t be very populous.

The start-up scene might in part be constrained by limited amount of available funds (anecdotally the angel investor / VC scene in Poland is very modest). However, the Digital Poland report states:

Categorically, as experts point out, the main barrier to the development of the Polish AI sector is not the absence of funding or expertise but rather a very limited demand for solutions based on AI.

My personal contacts echo this conclusion – they are not that worried about funding. Anecdotally, there is a huge pool of state grants (NCBiR) with limited competition for them (although post-COVID-19 they may all but evaporate).

Multiple experts cited by Digital Poland all list domestic demand as the primary concern. According to the survey, potential local clients simply do not understand the technology well enough to realise how it can benefit them (41% of responses in a multiple choice questionnaire – single highest cause; [client] staff not understanding AI had its own mention at 23% and [managers] not understanding AI came at 22%).

The AI market in Poland is focused on more commercial products (Big Data analytics, sales, analytics) rather than cutting-edge innovative research. It is understandable – in an ecosystem of limited size with very limited local demand the start-ups’ decision to develop more established, monetisable, applications which can be sold to a broad pool of global clients is a reasonable business strategy.

One side-conclusion I found really interesting is that there’s quite a vibrant conference and meetup scene given how nascent and “unsolidified” the AI ecosystem is.

The Polish AI Policy document is an interesting complement to the Digital Poland report. While the latter is a thoroughly researched snapshot of the Polish AI market right here right now (2019 to be exact), the policy document is more of a mission statement – a mission of impressive ambitions. I always support bold, ambitious, and audacious thinking – but experience has taught me to curb my enthusiasm as far as Polish policy-making is concerned. The grand visions for 2019 – 2027 come with not even a draft of a roadmap. The document is also, unfortunately, quite pompous and vacuous at times.

The report is rightly concerned about the impact on jobs; it expects that more jobs will be created than lost, and concludes that some of this surplus should benefit Poland. One characteristic of the Polish economy is that it (still) has a substantial number of state-owned enterprises in key industries (banking, petrochemicals, insurance, mining and metallurgy, civil aviation, defence), which are among the largest in their industries on a national scale. Those companies have the size and the valid business cases for AI, yet they don’t seem ready (from education and risk-appetite perspectives) to commit to AI. State-level policy could provide the nudge (if not outright push) towards AI and emerging technologies, yet, unfortunately, that is not happening.

The report rightly acknowledges the skills gap, as well as some issues on the education side (dwindling PhD rates, relatively low (still!) level of interest in AI among Polish students as measured by thesis subject choices). The quality of Polish universities merits its own article (merits its own research, in fact). On one hand, the anecdotal and first-hand experiences lead me to believe that Polish computer scientists are absolutely top-notch; on the other, the university rankings are… unforgiving (there are literally two Polish universities on the QS Global 500 list 2020, at positions #338 and #349[v]).

Last but not least, a couple of Polish AI companies I like (selection entirely subjective):

- Sigmoidal – AI business/management consultancy.

- Edward.ai – AI-aided sales and customer relationship management (CRM) solutions.

- Fingerprints.digital – behavioural biometrics solutions.

Disclaimer: I have no affiliations with any of the abovementioned companies.

[i] Are we looking at corporate research spending? Government funding/grants for academia? Absolute amounts or % of GDP? How reliable are the figures and how consistent are they between different states? etc. etc.

[ii] Source: World Bank (https://data.worldbank.org/indicator/NY.GDP.PCAP.CD?most_recent_value_desc=true)

[iii] You can read the original Chinese version here: http://www.caict.ac.cn/kxyj/qwfb/qwsj/201905/P020190523542892859794.pdf

[iv] Jeff’s English translation can be found here: https://docs.google.com/document/d/15WwZJayzXDvDo_g-Rp-F0cnFKJj8OwrqP00bGFFB5_E/edit#heading=h.fe4k2n4df0ef

[v] https://www.topuniversities.com/university-rankings/world-university-rankings/2020

Nerd Nite London – AI to the rescue! How Artificial Intelligence can help combat money laundering

15-Apr-2020

In April 2020, at the height of the UK lockdown, I had the pleasure of being one of three presenters at an online edition of Nerd Nite London. Nerd Nite is a wildly popular global meetup series, with multiple regional chapters. Each chapter is run by volunteers, and the proceeds from ticket sales (after costs) go to local charities. In this sense, lockdown did us an odd favour: normally Nerd Nites are organised in pubs, so there is a venue rental cost. This time the venues were our living rooms, so pretty much all the money went to a local foodbank.

I had the pleasure of presenting on one of the topics close to my heart (and mind!), which is the potential for AI to dramatically improve anti-money laundering efforts in financial organisations. You can find the complete recording below.

Enjoy!

UCL Digital Ethics Forum: Translating Algorithm Ethics into Engineering Practice

Tue 04-Feb-2020

On Tue 04-Feb-2020 my fellow academics at UCL held a workshop on algorithmic ethics. It was organised by Emre Kazim and Adriano Koshiyama, two incandescently brilliant post-docs from UCL. The broader group is run by Prof. Philip Treleaven, who is a living legend in academic circles and an indefatigable innovator with an entrepreneurial streak.

Algorithmic ethics is a relatively new concept. It’s very similar to AI ethics (a much better-known concept), with the difference being that not all algorithms are AI (meaning that algorithmic ethics is a slightly broader term). Personally, I think that when most academics or practitioners say “algorithmic ethics” they really mean “ethics of complex, networked computer systems”.

The problem with algorithmic ethics doesn’t start with them being ignored. It starts with them being rather difficult to define. Ethics are a bit like art – fairly subjective and hard to pin down. Off the top of our heads we can probably think of cases of (hopefully unintentional) discrimination against job applicants on the basis of their gender (Amazon), varying loan and credit card limits offered to men and women within the same household[i] (Apple / Goldman), online premium delivery services more likely being offered to white residents than black[ii] (Amazon again). And then there’s the racist soap dispenser[iii] (unattributed).

These examples – deliberately broad, unfortunate and absurd in equal measure – show how easy it is to “weaponise” technology without an explicit intention of doing so (I assume that none of the entities above intentionally designed their algorithms as discriminatory). Most (if not all) of the algorithms above were AIs which learned from a vast training dataset, or optimised a business problem without sufficient checks and balances in the system.

With all of the above most of us will just know that they were unethical. But if we were to go from an intuitive to a more explicit understanding of algorithmic ethics, what would it encompass exactly? Rather than try to reinvent ethics, I will revert to trusted sources: one of them will be the Alan Turing Institute’s “Understanding artificial intelligence ethics and safety”[iv] and the other will be a 2019 paper “Artificial Intelligence: the global landscape of ethics guidelines”[v] co-authored by Dr. Marcello Ienca from ETH Zurich, whom I had the pleasure of meeting in person at the Kinds of Intelligence conference in Cambridge in 2019. The latter is a meta-analysis of 84 AI ethics guidelines published by various governmental, academic, think-tank, or private entities. My pick of the big ticket items would be:

- Equality and fairness (absence of bias and discrimination)

- Accountability

- Transparency and explicability

- Benevolence and safety (safety of operation and of outcomes)

There is an obvious fifth – Privacy – but I have slightly mixed feelings when it comes to throwing it in the mix with the abovementioned considerations. It’s not that privacy doesn’t matter (it matters greatly), but it’s not as unique to AI as the above. Privacy is a universal right and consideration, and doesn’t (in my view) feed and map to AI as directly as, for example, fairness and transparency.

Depending on the context and application, the above will apply in different proportions. Fairness will be critical in employment, provision of credit, or criminal justice, but I won’t really care about it inside a self-driving car (or a self-piloting plane – they’re coming!) – then I will care mostly about my safety. Privacy will be critical in the medical domain, but it will not apply to trading algorithms in finance.

The list above contains (mostly humanistic) concepts and values. The real challenge (in my view) is two-fold:

- Defining them in a more analytical way.

- Subsequently “operationalising” them into real-world applications (both in public and private sectors).

The first speaker of the day, Dr. Luca Oneto from the University of Genoa, presented mostly in reference to point #1 above. He talked about his and his team’s work on formulating fairness in a quantitative manner (basically “an equation for fairness”). While the formula was mathematically a bit above my paygrade, the idea itself was very clear, and I was sold on it instantly. If fairness can be calculated, with all (or as much as possible) ambiguity removed from the process, then the result will not only be objective, but also comparable across different applications. At the same time, it didn’t take long for some doubts to set in (although I’m not sure to what extent they were original – they were heavily inspired by some of the points raised by Prof. Kate Crawford in her Royal Society lecture, which I covered here). In essence, measuring fairness seems do-able when we can clearly define what constitutes a fair outcome – which, in many cases in real life, we cannot. Let’s take two examples close to my heart: fairness in recruitment and the Oscars.

With my first degree being from a not-so-highly-ranked university, I know for a fact I have been autorejected by several employers – so (un)fairness in recruitment is something I feel strongly about. But let’s assume the rank of one’s university is a decent proxy for their skills, and let’s focus on gender representation. What *should be* the fair representation of women in typically male-dominated environments such as finance or tech? It is well documented that women drop out of STEM careers at a high rate[vi] [vii] (around 40% of them do), and widely debated as to why. The explanations go from “hegemonic and masculine culture of engineering” to challenges of combining work and childcare disproportionately affecting new mothers. What would be the fair outcome in tech recruitment then? A % representation of women in line with the present-day average? A mandatory affirmative action-like quota? (If so, who would determine the fairness of the quota, and how?) 50/50 (with a small allowance for non-binary individuals)?

And what about additional attributes of potential (non-explicit) discrimination, such as race or nationality? The 2020 Oscars provided a good case study. There were no women nominated in the Best Director category (a category which historically has been close to 100% male, with exactly one female winner, Kathryn Bigelow for “The Hurt Locker”, and 5 female nominees, and zero black winners and 6 nominees), and only one black person across all the major categories combined (Cynthia Erivo for “Harriet”). Stephen King caused outrage with his tweet about how diversity should be a non-consideration – only quality (he later graciously explained that it was not yet the case today[viii]). Then the South Korean “Parasite” took the Best Picture gong – the first time in the Academy Awards’ history the top honour went to a foreign-language film. My question is: what exactly would be fair at the Oscars? If it was proportional representation, then some 40% of the Oscars should be awarded to Chinese movies, another 40% to Indian ones, with the remainder split among European, British, American, Latin, and other international productions. Would that be fair? Should a special quota be saved for American movies given that the Oscars and the Academy are American institutions? Whose taste are the Oscars meant to represent, and how can we measure the fairness of that representation?

All these thoughts flashed through my mind as I was staring (somewhat blankly, I admit) at Dr. Oneto’s formulae. The formulae are a great idea, but determining the distributions to measure the fairness against… much more of a challenge.

The second speaker, Prof. Yvonne Rogers of UCL, tackled AI transparency and explainability. She covered the familiar topics of AIs being black boxes and the need for explanations in important areas of life (such as recruitment or loan decisions). Her go-to example was AI software scrutinising facial expressions of candidates during the recruitment process based on unverified science (as upsetting as that is, it’s nothing compared to the fellas at Faception who declare they can identify whether somebody is a terrorist by looking at their face). While my favourite approach towards explainable AI, counterfactuals, was not mentioned explicitly, it was definitely there in spirit. Overall it was a really good presentation on a topic I’m quite familiar with.

The third speaker, Prof. David Barber of UCL, talked about privacy in AI systems. In his talk, he strongly criticised present-day approaches to data handling and ownership (hardly surprising…). He presented an up-and-coming concept called “randomised response”. Its aim is described succinctly in his paper[ix] as “to develop a strategy for machine learning driven by the requirement that private data should be shared as little as possible and that no-one can be trusted with an individual’s data, neither a data collector/aggregator, nor the machine learner that tries to fit a model”. It was a presentation I should have been interested in – and yet I wasn’t. I think it’s because in my industry (investment management) privacy in AI is less of a concern than it would be in recruitment or medicine. Besides, IBM sold me on homomorphic encryption during their 2019 event, so I was somewhat less interested in a solution that (if I understood correctly) “noisifies” part of personal data in order to make it untraceable, as opposed to homomorphic encryption’s complete, proper encryption.
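To make the “noisifying” idea a little more concrete, here is a minimal sketch of the classic coin-flip form of randomised response. It is an illustration under assumptions, not the scheme from Prof. Barber’s paper: the function names, the 50% truth probability, and the synthetic 30% population are all made up for the example. Each individual’s report is deniable, yet the aggregate rate can still be recovered.

```python
import random


def randomised_response(truth: bool, rng: random.Random, p_truth: float = 0.5) -> bool:
    """Report the true answer with probability p_truth, otherwise a fair coin flip."""
    if rng.random() < p_truth:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(reports: list[bool], p_truth: float = 0.5) -> float:
    """Invert the noise, using E[reported] = p_truth * rate + (1 - p_truth) * 0.5."""
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth) * 0.5) / p_truth


# Hypothetical population: 30% of 10,000 people hold the sensitive attribute.
rng = random.Random(42)
true_answers = [i < 3000 for i in range(10_000)]
reports = [randomised_response(answer, rng) for answer in true_answers]

estimate = estimate_true_rate(reports)
print(f"estimated rate: {estimate:.3f}")  # close to 0.30, without trusting any single report
```

The trade-off is visible in `p_truth`: the lower it is, the more deniable each answer becomes, and the more respondents are needed for a stable estimate.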

In the only presentation from the business perspective, Pete Rai from Cisco talked about his company’s experiences with broadly defined digital ethics. It was a very useful counterpoint to the at times slightly too philosophical or theoretical academic presentations that preceded it. Like with many others, though, I’m not sure to what extent it really related to digital ethics or AI ethics – I think it was more about corporate ethics and conduct. It didn’t make the presentation any less interesting, but I think it inadvertently showed how broad and ambiguous an area digital ethics can be – it’s very different things to different people, which doesn’t always help push the conversation forward.

The event was part of a series, so it’s quite regrettable I had not heard of it before. But that’s just a London thing – one may put in all the work and research to try to stay in the loop of relevant, meaningful, interesting events – and some great events will slip under the radar nonetheless. There are some seriously fuzzy, irrational forces at play here.

Looking forward to the next series!

ii https://arxiv.org/ftp/arxiv/papers/1906/1906.11668.pdf

iii https://www.salon.com/2019/02/19/why-women-are-dropping-out-of-stem-careers/

iv https://www.sciencedaily.com/releases/2018/09/180917082428.htm

v https://ew.com/oscars/2020/01/27/stephen-king-diversity-oscars-washington-post/

vi https://arxiv.org/pdf/2001.04942.pdf

vii https://www.sciencedaily.com/releases/2018/09/180917082428.htm

viii https://ew.com/oscars/2020/01/27/stephen-king-diversity-oscars-washington-post/

Royal Institution “Quantum in the City”

Sat 16-Nov-2019

On Sat 16-Nov-2019 the Royal Institution served its science-hungry patrons a real treat: a half-day quantum technologies showcase titled “Quantum in the City: the shape of things to come”. The overarching concept was to present what living in the “quantum city” of the future might look like.

It was organised with the participation of the UK National Quantum Technologies Programme and ran a day after a big industry event at the QE2 Centre.

Weekend events at the RI usually differ from the standard evening lectures in that they are longer and cover one area in more depth. This one was no exception: in addition to a 1.5hr panel discussion, there was an extensive technology showcase across the 1st floor of the RI building, with no fewer than 20 exhibitors, most of them from academia or university spin-off companies.

One of the chapters from Nassim Taleb’s “skin in the game” (full disclosure: I haven’t read the whole book; I only read the abridged chapter when it appeared in my news feed on, of all places, Facebook[1]) describes a social group he (with his usual Kanye charm) calls “Intellectual Yet Idiot”. I tick pretty much all the boxes in that description (except the “comfort of his suburban home with 2-car garage” – try “precarious comfort of his Qatari-owned 2-bed rental”), but none more than “has mentioned quantum mechanics at least twice in the past five years in conversations that had nothing to do with physics”. Guilty as charged, that’s me. The context in which I mention quantum mechanics, physics, and technologies in conversations is usually the same – I don’t understand them. I understand one or two of the basic concepts, but I still completely don’t get how with each new qubit the computing power of a quantum computer doubles, what quantum (let alone quantum-safe) encryption is, and why the observer makes all the difference (and what does “observer” even mean?! A conscious observer?!).

Consequently, I keep going to different quantum lectures and presentations, in order to actually understand what this stuff’s about. I basically hope that if I hear it for the n-th time, something in my brain will click. It was that hope that sent me to the RI in November of 2019. Plus, I was really keen to see practical applications of quantum technology.
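One part of the “each new qubit doubles it” claim can at least be shown as plain bookkeeping. The sketch below is a hedged illustration, not a simulation of a quantum computer: it only counts how many amplitudes a classical machine would need to describe n qubits (2 to the power n), which is the usual basis for the doubling statement. The function names are invented for the example, and “computing power” in any practical sense depends on far more than this count.

```python
def amplitudes_needed(n_qubits: int) -> int:
    """Number of amplitudes in a classical description of an n-qubit state."""
    return 2 ** n_qubits


def uniform_superposition(n_qubits: int) -> list[float]:
    """Equal-weight state over all basis states; squared amplitudes sum to 1."""
    size = amplitudes_needed(n_qubits)
    amplitude = size ** -0.5
    return [amplitude] * size


# Each extra qubit doubles the length of the description.
for n in range(1, 6):
    print(n, "qubits ->", amplitudes_needed(n), "amplitudes")

state = uniform_superposition(3)
total_probability = sum(a * a for a in state)
```

At 50 qubits the same count is already above 10^15 amplitudes, which is why classical simulation runs out of memory so quickly.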

The discussion panel was great. The panellists were:

- Miles Padgett, Principal Investigator for the QuantIC Hub

- Kai Bongs, Director, UK Quantum Technology Hub for Sensors and Metrology (I previously attended Kai’s presentation on quantum sensors at New Scientist Live)

- Dominic O’Brien, Co-Director, NQIT (UK Quantum Technology Hub for Networked Quantum Information Technologies)

- Tim Spiller, Director, UK Quantum Technology Hub for Quantum Communications Technologies

The discussion revolved around current and future applications of quantum technologies. Like everyone, I know of quantum computers (I even saw IBM’s one during their Think!2019 event), and quantum encryption. I have a basic awareness of quantum sensors (from Kai’s talk at NS Live in 2019 or 2018) and some ambitious plans for quantum technologies-based medical imaging (“quantum doppelgangers” if I recall correctly… I heard of those during a Science Museum Lates event on quantum). Paul Davies mentioned quantum biology in his own RI lecture “what is life”, as did Prof. Jim Al-Khalili in some interview – but that’s about it.

Fundamentally though, my understanding was that quantum technologies are only beginning to emerge in academic and / or industrial settings. It was genuine news to me that existing technologies (chief among them semiconductors and transistors, which is basically all of modern technology and the Internet; also lasers and MRI scanners) are reliant on the effects of quantum mechanics and are referred to as “quantum 1.0”. The cutting-edge technologies emerging these days are “quantum 2.0”.

Imaging was a prominent use case for quantum technologies, across a number of fields: medical (endoscopy, brain imaging for dementia research), environmental, construction (what’s underneath the soil), industrial (seeing through dirty water or unclear air).

Quantum computing and encryption were also discussed at length. With quantum computing, we’re on the cusp of doing practically useful things at a much lower (energy and time) cost than traditional computing (nb. the Google experiment was a test problem, not a real problem). In some use cases, quantum computing may be orders of magnitude cheaper in terms of energy consumption compared to conventional computing. In some other use cases this saving will be minimal (interesting comment – I assumed that quantum computers would generate orders-of-magnitude energy and time savings across the board). In terms of encryption, the experts at the RI repeated almost verbatim what Ian Levy from NCSC / GCHQ said at a quantum computing panel at the Science Museum a few weeks prior: currently all our communications are encrypted and therefore assumed more or less safe. However, it is theoretically possible for an actor to store encrypted communications of today and decrypt them using quantum technology in the future. Work is underway to develop mathematical models for quantum-safe encryption.

There is work starting on standardization of quantum technologies to ensure their portability.

The panellists also discussed at length the research and investment landscape of quantum technologies across the UK. They noted that the UK was the first country in the world to come up with a national programme of academic + industry partnership and funding in quantum technology research. The US and their programme have (allegedly!) pretty much copied the British blueprint. To date, all distributed and committed funds are close to GBP 1bn. That’s a decent level of funding, but partly only because different groups and laboratories had already been set up and funded through different sources before. If the GBP 1bn had to fund everything from scratch, it might not be sufficient. Currently, a substantial part of UK quantum research funding (it varies by group and programme) comes from the EU. Brexit is an obvious concern.

Separately, there is an acute talent shortage in engineering in general, and even more so in quantum technology. Big tech companies are in a strong position to compete for talent because they can offer great salaries and interesting careers.

Speaking of quantum talent, the rooms of the RI were filled with the country’s (and likely the world’s) best and brightest in the field. 20 exhibitors presented their projects, all of which were applications-based rather than pure research. Some of those were proofs of concept (PoC’s), some were prototypes, and some were in between. A handful of exhibitors stood out based on my subjective and oft-biased judgement:

- Underwater 3D imaging, ultra-thin endoscope, and a camera looking around corners (all from QuantIC: UK tech hub for quantum enhanced imaging) were all practical examples of advanced imaging applications.

- Trapped Ion Quantum Computer (University of Sussex). The technological details are a little above my paygrade, but apparently different engineering approaches towards quantum computing lend themselves differently to scaling. The researchers in Sussex use microwave technology, which differs from existing mainstream approaches and can be quite promising. I have had a soft spot and very high regard for the Sussex lab ever since I met its head, the fabulously brilliant and eccentric Winfried Hensinger, when he presented at one of the New Scientist Instant Expert events.

- Quantum Money (University of Cambridge) was the only project related to my line of work and a slightly exotic one even in the weird and wonderful world of quantum technologies. S-Money, as it’s called, is at the intersection of quantum theory and theory of relativity, and could enable unhackable identification as well as lag-free transacting – on Earth and beyond. And they say the finance industry lacks vision…

In summary, the RI event was nothing short of awesome. I don’t know whether I got anywhere beyond the “Intellectual yet Idiot” point on the scale of quantum expertise, but I can live with that. I learned of new applications of quantum technologies, and I met some incandescently brilliant people; couldn’t really ask for much more.

[1] Fuller disclosure: I only ever read Nassim’s “black swan”, and I consider it to be a genuinely great book. I bought “fooled by randomness” and “antifragile” with an intention of reading them some day (meaning never). Still, if I mention the titles with sufficient conviction, most people usually assume I read those end-to-end. I don’t correct them.

London Business School Energy Club presents: the renewables revolution

Thu 26-Sep-2019

As an LBS alumn (or is it “alumnus”? I never know…) I am a part of a very busy e-mail distribution list, connecting tens of thousands of LBS grads worldwide. LBS, its clubs, alumni networks etc. regularly organise different events, and I make an active effort to attend one at least every couple of months. I went to “the business of sustainability” a couple of months ago, so the upcoming “the renewables revolution” organised by LBS Energy Club (and sponsored by PWC) was an easy choice.

Renewable energy is not a controversial topic in its own right (unless you’re a climate change denier or a part of the fossil fuel lobby, especially on the coal side). It’s a controversial topic along the lines of disruption of powerful, established, entrenched industries (mostly mining and petrochemicals) and also along the lines of disruption of life(style) as we know it. Most of us in the West (the proverbial First World, even if it doesn’t feel like one very often) want to live green, sustainable, environmentally-friendly lifestyles… as long as the toughest environmental sacrifice is ditching a BMW / Merc / Lexus etc. for a Tesla, and swapping paper tissues for bamboo-based ones (obviously I am projecting here, but I don’t think I’m that far off the mark). Us Westerners (if not “we mankind”, quoting Taryn Manning’s character from “hustle and flow”) love to consume, love the ever-expanding choices, love all the conveniences we can afford – the prospect of cutting down on hot water, not being able to go on overseas holidays once or twice a year, or not replacing our mobiles whenever we feel like it, is an unpleasant one. Renewables, with their dependency on weather (wind, solar) and generally less abundant (or at least less easily and immediately abundant) output are an unpleasant reminder that the time of abundance (when, quoting Michael Caine’s character from “Interstellar”, “every day felt like Christmas”) might be coming to an end.

Furthermore, even for a vaguely educated Westerner like myself, renewables are a source of certain cognitive dissonance. On one hand we have several consecutive hottest years on record, floods, wildfires, disrupted weather patterns, environmental migrants, the prospect of ice-free Arctic ocean, Extinction Rebellion etc. – on the other hand we have seemingly very upbeat news like “Britain goes week without coal power for first time since industrial revolution”, “Fossil fuels produce less than half of UK electricity for first time”, or “Renewable electricity overtakes fossil fuels in the UK for first time”. So in the end, I don’t know whether we’re turning the corner as we speak, or not.

There is no shortage of credible statistics out there – it’s just quite a challenge for a non-energy expert to understand them. According to BP, renewables (i.e. solar, wind and other renewables) accounted for approx. 9.3% of global electricity generation in 2018 (25% if we add hydroelectric). Then, as per the World Bank (spreadsheets with underlying data from Renewable Energy), in 2016 all renewables accounted for approx. 11% of global energy generation (35% if we add hydroelectric). Then, as per IEA, in 2018 renewables accounted for a measly 2% of total energy production (rising to 12% if we add biomass and waste, and to 15% if we add hydro).

2% looks tragic, 9.3% looks poor, 25% or 35% looks at least vaguely promising – but no matter which set of stats we choose, fossil fuels still account for the vast majority of global energy generation (and the demand is constantly rising). Consequently, my anxiety remains well justified. It was the reason I went to the event in the first place – to find out what the future holds.

The panellists were:

- Equinor, Head of Corporate Financing & Analysis, Anca Jalba

- Glennmont Partners, Founding Partner, Scott Lawrence

- Globeleq, Head of Renewables, Paolo de Michelis

- Camco, Managing Director, Geoff Sinclair

The panellists made a wide range of observations, depending on their diverse geographical focus and nature of their companies. You will find a summary below, coupled with my personal observations and comments. I intentionally anonymized the speakers’ comments.

One of the panellists remarked that in the last decade the cost of 1MW of solar panels went from EUR 6-8m to EUR 3.5m to EUR 240k, and at the same time ESG went from being a niche area in investment management to being very much at the core (I echo the latter from my own observations). At the same time, according to research, in order to meet Paris Accord targets, by 2050 50% of global energy will need to come from renewables. So no matter which set of the abovementioned statistics we choose, we’re globally nowhere near 50%.

The above comments are probably fairly well known and sort of go without saying. However, the speakers made a whole lot of more targeted observations.

The concept of distributed renewables (individual households generating their own electricity, mostly using solar panels on their roofs, and feeding surplus into the power grid) was mentioned. This is being encouraged by some governments, and the speakers noted that governments are the key players in reshaping the energy landscape. They were also quite candid on there being a lot of rent seeking behaviour in the (established) energy sector (esp. utility companies). Given the size and influence of the utility sector, it is fairly understandable that they may have mixed feelings towards activities that may effectively undercut them. At the same time, one would hope that at least some of them see the changes coming, and appreciate their necessity and inevitability by adapting rather than opposing. Interestingly, emerging markets where energy infrastructure and power generation are not very reliable were mentioned as an opportunity for off-grid renewables.

We were also reminded that electricity generation is just part of the energy mix. It’s a massive part, of course, but there is also automotive transport, aviation, and shipping – all of which consume vast amounts of energy, with very few low-carbon or no-carbon options. Electric vehicles are a promising start (not without their own issues though: cobalt mining), but aviation and shipping do not currently have viable non-fossil-fuel-based options (except perhaps biofuels, but I doubt there is enough arable land in the whole world to plant enough biofuel-generating crops to feed the demands of aviation and shipping).

The need for a (truly) global carbon tax was also raised. I think (using tax havens as reference) it may be challenging to implement, but, unlike corporate domicile and taxation, energy generation is generally local, so if governments taxed emissions physically produced by utility companies within their borders, that could be more feasible. Then again, it could be quite disruptive and thus challenging politically (think the fight around coal mining in the US or the gilets jaunes in France as examples).

On the technical side, intermittency risk is a big factor in renewables, and energy storage is not there yet on an industrial scale. It is a huge investment opportunity.

In terms of new sources of renewable energy, floating offshore wind farms were mentioned as the potential next big thing, even though they are currently not commercially viable. My question about the panellists’ views on the feasibility of fusion power was met with scepticism.

In terms of investment opportunities, one of the speakers (prompted by my question) mentioned that climate change adaptation is also one. This echoes exactly what Mariana Mazzucato said at the British Library event some time ago (pls see my post “Mariana Mazzucato: the value of everything” for reference), so there might be something there. More broadly, there seemed to be a consensus among the speakers that once subsidies disappear, only investors with large balance sheets and portfolios of projects will be in a position to compete, given the capital-intensive nature of energy infrastructure.

I ended by asking a question about the inevitability and scale of impact of the climate change on the world as we know it and on our lifestyles. I didn’t get a very concrete reply other than there *will be* impact, and adaptation will be essential. It hasn’t lifted my spirit, but I don’t think I was expecting a different answer. In the end, it looks like the renewables are currently more of an evolution than revolution. Evolution is better than nothing; it might just not be enough.

The Alan Turing Institute presents Lilian Edwards “regulating unreality”

11-Jul-2019

On Thursday 11-Jul-2019 the Alan Turing Institute served up a real treat in the form of a lecture by Professor Lilian Edwards[1]. To paraphrase Sonny Bono, Lilian Edwards is not just a professor, she’s an experience. Besides being a prolific scholar at the bleeding edge of law and regulation, she is one of the most engaging and charismatic speakers I have ever met. I first heard Prof. Edwards present at one of the New Scientist Instant Expert event series (of which I’m a big fan btw), and I have been her fan ever since.

After hearing a comprehensive and at times provocative lecture on legal and social aspects of AI and robotics (twice!) in 2017, in 2019 Prof. Edwards focused on something even more cutting edge: social and legal aspects of deepfakes.

Delivered in the stunning Brutalist surroundings of the Barbican and hosted by Professor Adrian Weller, the lecture started with revisiting the first well-known deepfake: Gal Gadot’s face photorealistically composited onto a body of an adult film actress in a 2017 gif from Reddit.

Most things on the Internet start with (or end, or lead to) porn – it’s simply the way it is. However, developing technologies which allow access to, streaming, or sharing of images of real consenting adults engaging in enjoyable, consensual activities is one thing (the keyword being in my personal opinion “consent”) – deepfaking explicit images of celebrities or anyone else is vulgar and invasive (try to imagine photos of your mom, daughter, or even yourself being digitally, photorealistically “pornified”, and then think how it would make you feel). As awful as that is, deployment of deepfake technology in politics is something else entirely. Deepfakes are likely the new fake news, albeit taken to a new level: a seamless audio-visual distortion of reality.

Prof. Edwards reminded everyone that image manipulation was deployed in politics in the pre-deepfake era – using very simple techniques, often to very successful effect: the Nancy Pelosi video slowed down in order to give the appearance of her slurring and seemingly being drunk, and the White House video of CNN reporter Jim Acosta. Furthermore, she pointed out that deepfakes are not necessarily the end of the road: they are just one (disturbing) element of seamlessly generated/falsified “synthetic reality”.

Among the many threats (“use cases”) posed by deepfakes, the ones that resonated with me most strongly were:

- Deepfakes are not just about presenting something fake as something real, they’re also about discrediting something real as fake.

- Plenty of potential uses in both civil and criminal smears.

- Deepfakes create plausible deniability for anything and everything.

Moving on to legal and social considerations, Prof. Edwards looked at a plethora of existing and proposed legal and technological solutions across the US, UK, and EU, expertly pointing out their shortcomings and/or unfeasibility. What moved me the most was the similarity (at least in terms of underlying concept and intent) between deepfakes and revenge porn, which I find absolutely and utterly disgusting. Another consideration was the question of one, objective, canonical reality (particularly online): while it wasn’t raised in great detail (we didn’t take the quantum physics route), it resonated strongly with me. Lastly (in true Lilian Edwards cutting edge/provocative thinking style) there was a question whether reality should be considered a human right.

In terms of big, open questions, I think 2 stand out particularly prominently:

- What should be the strategy: ex ante prevention or post factum sanctions?

- Whom to prosecute: the maker, the distributor, the social media platform, the search engine?

Overall, it was a fantastic, thoroughly researched and brilliantly delivered lecture (and at GBP 4.60 it wasn’t just the hottest ticket in town, but very likely the cheapest one too). You can watch the complete recording on Turing’s YouTube channel. You can hear me around the 01:10:00 mark, raising my concerns about deepfake-driven detachment from reality among younger generations.

[1] www.turing.ac.uk/events/turing-lecture-regulating-unreality

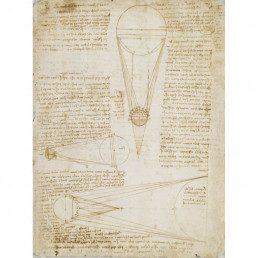

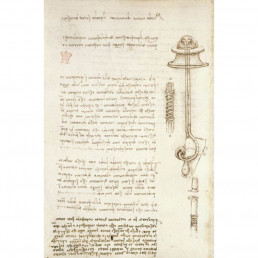

Leonardo at the British Library

11-Aug-2019

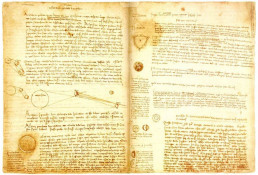

A museum exhibition dating back half of a millennium may not immediately seem like an organic fit in a cutting-edge finance and technology blog. However, this isn’t just any exhibition we’re talking about – this is a Leonardo da Vinci exhibition at the British Library. The quality and quantity of scientific and academic content of the works on display were orders of magnitude greater than any technology discussed throughout the rest of my blog.

With 3 codices for the first time on display together, the exhibition makes for a riveting experience (and also a chilling one – the exhibition room is kept at 17 Centigrade in order to preserve the manuscripts). Each and every one of the pages on display (“codex” is really just a fancy term for “notebook”) presents an insight or thought that would make worthy life’s work for most non-genius folk.

Facing Leonardo’s work is an experience comparable to seeing “2001: a space odyssey” for the first time – it’s not exactly religious, not really spiritual (I don’t like this word anyway), but transcendental still. There is, of course, a huge degree of subjectivity (my mom was not exactly riveted by “2001:…”), but facing this calibre of genius up close was deeply moving. Right before my eyes, separated from me only with a thin sheet of glass, were the notes of one of the finest polymathic minds in the history of mankind.

The exhibition showcases 3 of Leonardo’s codices (out of a total of 11 his works were broken into):

- Codex Arundel: 283 sheets of notes on various subjects, including mechanics and geometry (from British Library’s own collection)

- Codex Forster: notes on a variety of scientific topics including hydraulics, weights, and geometry (from the V&A Museum in London)

- Codex Leicester: 36 sheets on a variety of topics including astronomy, geology, and the flow of water (one of the topics Leonardo seemed to have a particular fascination with). Codex Leicester is a property of Bill Gates, who purchased it in 1994 for USD 30.8 mln* (so close to a million per sheet) and allows it to be exhibited worldwide.

Leonardo’s famous mirror writing just adds to his mystique – what made him write this way? Is it just because he was left-handed? (seems a bit contrived an explanation). Fun fact: the multiplication table (up to 10×10) on one of the sheets is written left-to-right.

Even on a purely aesthetic level, Leonardo’s work is a delight. The beige sheets – almost all of them richly illustrated – have artistic values. The drawings may not all be quite Salvator Mundi-quality, but they are spectacular nonetheless. Even the handwriting itself is stylish; I mean, just look:

The realisation that Leonardo made these notes and drawings himself was pretty mindblowing in its own right. It’s almost like Leonardo was there himself.

The stylish-to-a-fault exhibition** doesn’t even present the complete contents of the 3 codices – just selected sheets, and many of these sheets contain enough to be an accomplished Renaissance academic’s life’s work. So that’s just a fraction of his scientific work, all of which is on top of 2*** of the world’s most famous paintings: the Mona Lisa and the Last Supper. To me that realisation – coming up close with it – was the most moving and transfixing experience of the entire exhibition: how did he do it? Was Leonardo just some sort of statistical inevitability (out of a large enough population a supergenius polymath of his calibre simply *had to* emerge)? What did it feel like to be him? Was the famously private Leonardo aware of the extent of his own genius and talent?

All these questions (and many more) were tackled by Oxford University’s Professor Martin Kemp during an accompanying event on Tue 06-Aug-2019. In an interdisciplinary talk (which lasted well over an hour but felt like a moment) he showed the audiences how to find beauty in Leonardo’s scientific work and how to find scientific accuracy in Leonardo’s art.

Prof. Kemp said that Leonardo exhibited “almost pathological attention to detail” – something I’m a big fan of myself. He also mentioned that everything Leonardo ever did (though I’m unsure whether that included paintings) was an act of analysis – “how does the mechanism work?” was apparently one of the questions driving Leonardo throughout his life and work. Professor Kemp added that Leonardo admired the perfection of the structure of things and believed that a great design had an element of inevitability; consequently, Leonardo was much more of a geometric rather than an arithmetic mind. He set himself impossible, “unfinishable” tasks (something I can relate to), was famously private (something I can’t relate to at all), but he did apparently like dirty humour (something I can relate to).

Prof. Kemp mentioned that for Leonardo science and fantasy went hand in hand. It wasn’t perhaps entirely uncommon at the time (though it would more likely have been science and religion and/or theology), but still, it seems just so naturally and organically fitting to his work, where groundbreaking science and art worked hand-in-hand.

Fun fact: Prof. Kemp is of a certain age (77 years young to be exact), yet when he was on stage discussing Leonardo, he was literally beaming with the enthusiasm and energy of someone much younger. It was quite incredible. I hope that I will one day find that one thing that will give me as much joy and fulfilment.

* Normally, I’m rather unimpressed with 8- or 9-figure sums paid for works of art (it’s pure excess to me), but I have to say, for Leonardo’s codex I’m willing to make an exception: that was money well spent.

** Fittingly, the exhibition is sponsored and styled by Pininfarina, the Italian automotive design house behind some of the world’s most stylish sports cars.

*** 3 if we count Salvator Mundi.

Polish academic community releases Manifesto for Polish AI

19-May-2019

The big press release of the season (possibly of the year as well, even though we’re less than halfway through) is without a doubt the European Commission’s Ethics Guidelines for Trustworthy AI1 (published in Apr-2019). Most developed states have their own AI strategies. That list includes my home country, Poland, which released an extensive AI strategy document in Nov-2018. The document (which I may review separately on another occasion) is not a strategy proper; it’s more of a summary of key considerations in formulating a full-blown strategy.

It is likely that the lack of an explicit strategy (and the lack of an explicit commitment to funding) led the Polish academic community to publish its own Manifesto for Polish AI.

Poland may not seem like an obvious location to launch an AI (or any other tech) startup, but anecdotal evidence suggests otherwise. There are pools of funds (including state subsidies and grants) available to tech entrepreneurs and a relatively low number of entrepreneurs competing for those funds. The most popular explanations for this are the state’s attempts to stimulate the digital economy and to stem (maybe even reverse) the pervasive brain drain, which started when Poland joined the EU in 2004.

Entrepreneurship is one thing, but research is something different altogether – at least in Poland. In the absence of home-grown innovation leaders the likes of Amazon or DeepMind, research is almost entirely confined to academic institutions. Reliant entirely on state funding, Polish academia has always been underfunded: in 2018 Polish GERD (gross domestic expenditure on research and development) was 1.03% of GDP, compared to the EU’s average of 2.07%2 3. In all fairness, the growth of GERD in Poland has been rapid (from 0.56% in 2007) compared to the EU (1.77% in 2007), but the current expenditure is still barely half of the EU’s average (not to mention global outliers: Israel and South Korea both 4.2%, Sweden 3.25%, Japan 3.14%4).
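For readers who like to check the arithmetic, here is a minimal Python sketch of the comparison above. It uses only the four GERD figures quoted in the paragraph; the variable and function names are mine and purely illustrative.

```python
# GERD as a percentage of GDP, using only the figures quoted in the text.
gerd = {
    "Poland": {2007: 0.56, 2018: 1.03},
    "EU": {2007: 1.77, 2018: 2.07},
}


def relative_growth(start: float, end: float) -> float:
    """Relative change from start to end, expressed as a fraction."""
    return (end - start) / start


poland_growth = relative_growth(gerd["Poland"][2007], gerd["Poland"][2018])
eu_growth = relative_growth(gerd["EU"][2007], gerd["EU"][2018])

# How much of the EU's 2018 level Poland had reached.
poland_share_of_eu = gerd["Poland"][2018] / gerd["EU"][2018]

print(f"Poland GERD growth 2007-2018: {poland_growth:.1%}")
print(f"EU GERD growth 2007-2018: {eu_growth:.1%}")
print(f"Poland 2018 GERD as a share of the EU average: {poland_share_of_eu:.1%}")
```

On these numbers Poland’s ratio grew by roughly 84% against roughly 17% for the EU, yet it still sits just under half of the EU average.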

Between flourishing tech entrepreneurship (18 companies on Deloitte’s FAST 50 Central and Eastern Europe list are Polish5 – though, arguably, none of them are cutting-edge technology powerhouses like Darktrace or Onfido, both 2018 laureates from the FAST 50 UK list6), widely respected tech talent, and a nascent AI startup scene (deepsense.ai, sigmoidal, growbots), Polish academics clearly felt a little left out – or wanted to make sure they wouldn’t be.

Consequently, in early 2019 Polish academics from Poznan University of Technology’s Institute of Computing Science published the Manifesto for Polish AI7, which has since been signed by over 300 leading academics, researchers, and business leaders.

The manifesto is compact. Below is an abridged summary:

Considering that:

- AI innovations can yield particularly high economic returns, as evidenced by the fact that AI is currently a primary focus of Venture Capital firms.

- The significance of AI for economic growth, social development, and defence is recognised by world leaders.

- Innovative economies worldwide are based on strong commitment to science and tertiary education. The world’s most innovative countries such as South Korea, Israel, the Scandinavian countries, the USA, and increasingly China happen to also contribute the highest proportion of their GDP to R&D and education.

- Polish academia has significant potential in the field of AI.

- A barrier to the growth of innovative start-ups in Poland is not just lack of capital, but also, if not mainly, too low a number of start-ups themselves.

- Innovative start-ups worldwide are developed mostly within academic ecosystems.

- There is an insufficient number of IT specialists in Poland, and even more so of AI specialists.

We are calling on the decision makers to develop a strategy for growth of key branches of R&D and tertiary education (in particular AI), and to take decisive actions to fulfil that strategy:

- Severalfold increase in expenditure on primary research and implementation of AI to reach parity with the most innovative countries.

- Growth and integration of R&D teams working in the field of AI and improvement of their collaboration with the industry.

- Ensuring PhDs and other academics doing AI research receive grants and remuneration comparable to those in the (AI) industry.

- Additional funding for opening new AI university courses and broadening the intake into the existing ones.

- Funding for entrepreneurship centres (modelled on the Martin Trust Center for MIT Entrepreneurship) on Polish university campuses, offering students and employees entrepreneurship education, seed funding, co-working spaces etc.

Funds committed to the above will be a great investment, which will pay for itself many times over in the form of greater innovation, a higher number of AI experts on the job market, and in effect faster economic and social growth, and better defence.

There is nothing controversial in the manifesto – in fact, most academics worldwide would probably sign a copy with “Poland” replaced with their respective country’s name. It may be a little idealistic/unrealistic (reaching R&D parity as % of GDP with global leaders is… ambitious), but that doesn’t diminish its merit. I for one would be ecstatic to see Poland committing 3% or 4% of GDP to R&D. Separately, it’s really nice to see that a compelling argument can be presented on literally 1 page. Less is almost always more.

1 https://ec.europa.eu/futurium/en/ai-alliance-consultation/guidelines

2 https://www.gov.pl/web/przedsiebiorczosc-technologia/systematycznie-nadrabiamy-dystans-pod-wzgledem-nakladow-na-badania-i-rozwoj

3 To further justify the “always” in “always underfunded”: Poland’s GERD average from 1996 to 2016 was 0.69%; EU’s was 1.84% (World Bank, https://data.worldbank.org/indicator/gb.xpd.rsdv.gd.zs)

4 https://data.worldbank.org/indicator/gb.xpd.rsdv.gd.zs – World Bank data for 2016 (most recent complete year’s data available for all countries).

5 https://www2.deloitte.com/content/dam/Deloitte/ce/Documents/about-deloitte/ce-technology-fast-50-2018-report.pdf

6 https://www.deloitte.co.uk/fast50/winners/2018/

7 https://manifestai.cs.put.poznan.pl/?fbclid=IwAR0-fp7VsRf-QHSr_SCFrqIj3P9BNcOCTz_vcEysUv_p3Bkv1XK2UI0kZp4

Natural Language Generation (NLG) is coming to asset management

Sun 17-Mar-2019

Natural Language Processing (NLP) is a domain of artificial intelligence (AI) focused on, well, processing normal, everyday language (written or spoken). It is used by digital assistants such as Siri or Google, smart speakers such as Google Home or Alexa, and countless chatbots and helplines all around the world (“in your own words, please state the reason for your call…”). The idea is to simplify and humanize human-computer interaction, making it more natural and free-flowing. It is also meant to generate substantial operational efficiencies for service providers, allowing their AIs to provide services that were previously either unavailable (human-powered equivalent of Siri – not an option) or costly (human-powered chats and helplines).

Natural Language Generation (NLG) is an up-and-coming twin of NLP. Again, the name is rather self-explanatory – NLG is all about AI generating text indistinguishable from what could be written by a human author. It has been slowly (and somewhat discreetly) taking off in journalism for a couple of years now.1 2 3

NLG is far less known and less deployed in financial services (and otherwise), but given the potential for operational efficiencies (AI can instantly, and at close to zero cost, produce text which would otherwise take humans much more time to prepare, and at a non-negligible cost) it makes an instant and strong business case. There are areas within asset management whose primary (if not sole) purpose is the preparation of standardised reports and summaries: attribution reports, performance reports, risk reports, or periodical fund/market updates. Some of these are so rote and rules-based that they make natural candidates for automation (attribution, performance, perhaps risk). Fund updates and the like are much more open and free-flowing, but still, they are rules- and template-driven.
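To make “rules- and template-driven” a little more concrete, below is a minimal Python sketch of the simplest possible form of NLG: rule-based template filling for a one-sentence performance commentary. Everything in it is hypothetical (the fund name, the figures, the `PerformanceRecord` class, the 0.05 percentage point tolerance), and it is not how any vendor’s product works; commercial systems are considerably more sophisticated.

```python
from dataclasses import dataclass


@dataclass
class PerformanceRecord:
    """Hypothetical input for one fund and one reporting period."""
    fund_name: str
    period: str
    fund_return_pct: float
    benchmark_return_pct: float


def describe_relative_performance(active_return_pct: float,
                                  tolerance: float = 0.05) -> str:
    """Rule: choose the wording from the sign and size of the active return."""
    if active_return_pct > tolerance:
        return "outperformed"
    if active_return_pct < -tolerance:
        return "underperformed"
    return "performed in line with"


def generate_commentary(record: PerformanceRecord) -> str:
    """Template: slot the figures and the chosen wording into a fixed sentence."""
    active = record.fund_return_pct - record.benchmark_return_pct
    wording = describe_relative_performance(active)
    sentence = (
        f"Over {record.period}, {record.fund_name} returned "
        f"{record.fund_return_pct:+.2f}% and {wording} its benchmark "
        f"({record.benchmark_return_pct:+.2f}%)"
    )
    if wording != "performed in line with":
        sentence += f" by {abs(active):.2f} percentage points"
    return sentence + "."


# Made-up example data, for illustration only.
example = PerformanceRecord("Example Equity Fund", "Q1 2019", 4.20, 3.10)
commentary = generate_commentary(example)
print(commentary)
```

Even a toy like this shows why attribution and performance reports are natural candidates: the figures already sit in a database, and the wording follows from a handful of rules. A human analyst would still review the output.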

AI replacing humans is an obvious recipe for controversy, but perhaps it is not the right framing of the situation: rather than consider AI as a *replacement*, perhaps it would be much better for everyone to consider it a *complement* or even more simply: a tool. You will still need an analyst to review those attribution reports and check the figures, and you will still need an analyst to review those fund updates. And with the time saved on compiling the report, the analyst can move on to doing something more analytical, productive, and value-adding. At least that’s the idea (QuantumBlack, an analytics consultancy and part of McKinsey, calls this “augmented intelligence” and did some research in this field which they shared during a Royal Institution event in 2018. You can watch the recording of the entire event here – the key slide is at 16:44. There is some additional reading on Medium here and here).

Some early adoption stories are beginning to pop up in the media: SocGen and Schroders (who, with their start-up hub, are quite proactive in terms of being close to the cutting edge of tech in investment management) are implementing systems for writing automated portfolio commentaries4. No doubt there will be more.

Disclaimer: this post was written by a human.

1 https://www.fastcompany.com/40554112/this-news-site-claims-its-ai-writes-unbiased-articles

2 https://www.wired.co.uk/article/reuters-artificial-intelligence-journalism-newsroom-ai-lynx-insight

3 https://www.wired.com/2017/02/robots-wrote-this-story/

4 https://www.finextra.com/pressarticle/75910/socgen-to-use-addventa-ai-for-portfolio-commentary