Do financial services firms need an AI Ethics function?

AI has generated a tremendous amount of interest in the financial services (FS) industry in recent years, even though it has had its peaks (2018 – 2019) and troughs (2020 – mid-2022, when the focus and / or hype cycle was on crypto, operational resilience or SPACs) during that relatively short period.

The multi-billion-dollar question about actual investment and adoption levels (particularly on the asset management side) remains open, and some anecdotal evidence suggests that they may be lower than the surrounding hype would have us believe (I covered that to some extent in this post). However, the spectacular developments in generative AI dating back to late 2022 (even though decent-quality natural text and image [deepfake] generating systems started to emerge around 2019) indicate that “this time is [really] different”, because both the interest and the perceived commercial opportunities are just too great to ignore.

However, as is being gradually recognised by the industry, there is more to AI than just technology. There are existing and upcoming hard and soft laws. There are cultural implications. There are risks to be taken into account and managed. There are reputational considerations. Lastly, there are ethics.

AI ethics experienced its “golden era” around 2015 – 2018, when just about every influential think tank, international agency, tech firm, government etc. released their takes on the topic, usually expressed as sets of broad, top-level principles which were benevolent and well-meaning on one hand, but not necessarily “operationalizable” on the other.

The arrival of generative AI underscored the validity of AI ethics; or at least underscored the validity of resuming a serious conversation about AI ethics in the business context.

Interestingly, AI ethics per se have never been particularly controversial. It was probably because the “mass-produced” ethical guidelines and principles were so universal that it would be really difficult to challenge them; an alternative explanation could be that they were seen as more academic than practical and were thus discounted by the industry as a moot point; or both. Hopefully in 2023, with generative AI acting as the catalyst, there is a consensus in the business world that AI ethics are valid and important and need to be implemented as part of the AI governance framework.

The question then is: is a dedicated AI Ethics function needed? I think we can all agree that there is a growing number of ethical implications of AI within the FS industry: all aspects of bias and discrimination, representativeness, diversity and inclusion, transparency, treating customers fairly and other aspects of fairness, potential for abuse and manipulation – and all of those considerations existed pre-generative AI. Generative AI amplifies or adds its own flavour to existing concerns, including trustworthiness (hallucinations), discriminatory pricing, value alignment or unemployment. It also substantially widens the grey area at the intersection of laws, regulations and ethics, such as personal and non-personal data protection, IP and copyright, explainability or reputational concerns.

The number of possible approaches seems limited:

- Disregard ethics and risk-accept it;

- Expand an existing function to cover AI ethics;

- Create a dedicated AI ethics function.

Risk-acceptance is technically possible, but with the number of things that could potentially go wrong with poorly governed AI and their reputational impacts on the organization, the risk / return trade-off does not appear very attractive.

The latter two options are similar, with the difference being the “FTE-ness” of the ethics function. Creating a new role allows more flexibility in terms of where that new role would sit in the organisational structure; on the other hand, at the current stage of AI adoption there may not be enough AI ethics work to fill a full-time role. Consequently, I think that adding AI ethics to the scope of an existing role is more likely than the creation of a dedicated role.

In either case the number of areas where AI ethics could sit appears to be relatively limited: Compliance, Risk or Tech; ESG could be an option too. A completely independent function would have certain advantages too, but it seems logistically unfeasible, especially in the long run.

Based on first-hand observations I think that the early days of “operationalizing” AI ethics within the financial services context will likely be somewhat… awkward. To what extent ethics are a part of the FS ethos is a conversation for someone else to have; I have seen and experienced organisations that were ethical and decent and others that were somewhat agnostic, so my personal experience is unexpectedly positive in that regard, but I might just have been lucky. I think that ESG is the first instance of the industry explicitly deciding to voluntarily make some ethical choices, and I think that ESG adoption may be a blueprint for AI ethics (I am also old enough to remember early days of ESG, and those sure did feel awkward).

While I have always been convinced of the validity of operationalised AI ethics, I think that until very recently I held the minority opinion. It is only very recently that perceptions seem to be changing, driven by spectacular developments in generative AI and the ethical concerns accompanying them. Currently (mid-2023) we are in a fascinating place: AI has been developing rapidly for close to a decade now, but the recent developments have been unprecedented even by AI standards. Businesses find themselves “outrun” by the technology, and, in a bit of a panicked knee-jerk reaction (and in part driven by genuine interest and enthusiasm, which currently abound), they are becoming much keener and more open-minded adopters than only a year or two ago. Now may be the time when the conversation on AI ethics reaches the point of operational readiness… or maybe not yet; time will tell. Sooner or later, mass implementation and operationalization of AI ethics will happen though. It must.

Is the value of the CFA designation what it used to be?

When I was a fresh grad (i.e., in the 2010s), working in my first financial services job (Bloomberg), the CFA designation was really *something*. It was the “s***”. The way a Master’s has more or less become the new Bachelor’s (or so my generation managed to convince ourselves), the CFA became *the* qualification to really stand out and distinguish oneself among the crowd of ambitious, driven, predominantly Russell Group-educated peers.

I was pleased with myself and my Master’s in Finance from London Business School for about one afternoon, until my Bloomberg colleague (who shall remain nameless) asked me only half-jokingly “so are you going to do CFA now?”; and of course, that’s exactly what I did.

CFA then…

I was never much of a social butterfly, so the lame-but-true “cancel on friends again” definition of the CFA prep process didn’t entirely apply to me, but I have to say that, compared to studying for a Master’s, studying for the CFA was another level. When studying for a Master’s or an MBA the general principle is “if you do the work, you *will* pass”; sometimes clearing the admission hurdles (GMAT, resume screening, interviews etc.) felt more challenging than the actual degree course. With the CFA, the “default is pass” rule simply did not apply. In fact, it was the exact opposite: in order to maintain the exclusivity and value of the qualification, the CFA Institute had every incentive to make failing the default; and it did. Back in my days the pass rate for Levels 1 and 2 was below 50%, which meant that the average candidate did indeed fail the exam; Level 3 had a slightly higher pass rate at around 50%.

The exams themselves (particularly Levels 1 and 2) were completely clinical. It did not matter who you were, who your parents were, where you studied, or even how you arrived at the answer. The answer was all that mattered, and seeing that it was a multiple-choice exam, there was no room for ambiguity either; you either got the right answer or not. The exams were arguably quite reductive in completely ignoring the reasoning and the thought process, but on the other hand they were the most meritocratic exams I had ever taken (save GMAT). They were clinical, sterile, anonymised with a hint of cruel; they cost me three years of my life; I loved them.

Once completed, I wasted no time updating my resume and expected my value in the professional / financial services job market to go up a significant notch. I can’t say for sure, but I think this is what more or less happened. Until…

…and now

Fast forward to 2023 and things feel quite different. Back in my days the exams used to be taken en masse, in a group setting, once a year on the first Saturday of June; the extreme intensity of the examination experience was considered to be very much a part of the package; a feature, not a bug. Back then it wasn’t just about *knowing* how to answer the questions, but also about *taking* and *passing* the exam in the sort of mid-19th century Prussia setting; the intellect counted at par with a tough stomach. The limited supply of exam dates (twice per year for Level 1 and once a year for Levels 2 and 3) helped maintain the value and exclusivity of the qualification; and the pain of failing a level (especially Levels 2 or 3) was truly… soul-crushing.

Nowadays there are *four* exam date options for Level 1, three for Level 2 and two for Level 3. It does, at least theoretically, reduce the minimum period of time required to complete the qualification, but the real crux of it is that the hugely increased supply of exam dates instantly dilutes the exclusivity of the qualification, because there is much lower cost (in terms of stress, effort, time) to retake it, and then retake it again if need be.

Furthermore, the exams are no longer real-time, all-in-one group sessions; they are administered at the computerised test centres. I appreciate that the pandemic forced the CFA Institute to get creative during two years of lockdowns and I don’t begrudge that, but I’m not sold on keeping this as a permanent solution. If anything, it feels like a convenient way for the CFA Institute to rid itself of organising costly and logistically demanding “physical” exam sessions in favour of outsourced test centres. I took GMAT at a computerised test centre and I took the CFA (all three levels) at a physical one; both the exams were super-important to me, but the test conditions for the former were a piece of cake compared to the latter in terms of the overall intensity of the experience.

Then there is the question of CFA ethics principles. They used to comprise some 20% of the curriculum back in my days. Those were (and presumably still are) perfectly good principles. The problem is that they never seem to have really taken hold in the mainstream financial industry even though this was, I assume, CFA Institute’s hope and plan. If anything, I think that over time those principles became even more obscure and effectively unknown outside the CFA circle.

Costs and benefits

Lastly, there is the ever-contentious issue of the annual designation fee (currently USD 299). That’s something I’ve always found somewhat problematic, and, increasingly, I don’t think I’m the only one. The golden rule for any qualification out there – starting with A-levels and ending with a Nobel – is that once you’ve earned it, it’s yours. With the CFA, you never really *own it* proper; you basically just lease it like an SUV or a holiday condo. The fee is usually paid for by the employers, but that’s beside the point, really. If I earned my right to those three letters after my last name, then why exactly do I need to keep paying for them year after year? USD 299 is not nothing…

Only my closest loved ones and I know how much work I put into becoming Wojtek Buczynski, CFA, and how much it cost me (financially and otherwise; mostly otherwise). I am definitely keeping the designation for now for those reasons alone; plus, I think it still benefits me professionally. That being said, I feel quite strongly that what was once extremely valuable has substantially devalued in recent years, thus lowering my ROI. For an investment qualification, I sure was hoping it would go up, or at least hold its value. But as they say: “past performance is not indicative of future returns”.

UK government AI regulation whitepaper review

On 29th March 2023 the UK government published its long-awaited whitepaper setting out its vision for regulating AI in the UK: A pro-innovation approach to AI regulation. It comes amidst generative AI hype, fuelled by spectacular developments of foundation models and solutions built on them (e.g., ChatGPT).

The current boom in generative AI has served as a catalyst for a renewed discourse on AI in financial services, which in recent years has been slightly muted due to the spotlight being mostly on crypto, as well as SPACs, digital operational resilience and new ways of working.

The whitepaper cannot be analysed without reference to another landmark AI regulatory proposal i.e. the EU AI Act. The EU AI Act was proposed in April 2021 and is currently proceeding through the legislative process of the European Union. It is widely expected to be enacted in the second half of 2023 or in early 2024.

The parallels and differences between the UK whitepaper and the EU AI Act

The first observation is that the UK government whitepaper is just that: a list of considerations with an indication of a general “direction of travel”, but nowhere near the level of detail of the EU AI Act (which is not entirely surprising given that the latter is a regulation and the former just a whitepaper). Both laws (the UK one being a draft soft law and the EU one being a draft hard law) recognise that AI will function within a wider set of regulations, both existing (e.g., liability or data protection) and new, AI-specific ones. The UK whitepaper acknowledges the very challenge of defining AI, something over which the initial draft of the EU AI Act caused notable controversy (1).

Both proposals offer general, top-level frameworks with detailed regulation delegated to industry regulators. However, the EU AI Act comes with detailed and explicit risk-weighted rules and prohibitions, while the UK whitepaper stops short of issuing any explicit guidelines in the initial phase.

The approach proposed by the UK government is to create a cross-sector regulatory framework rather than regulate the technology directly. The proposed framework centres around five principles:

- Safety, security, and robustness;

- Transparency and explainability;

- Fairness;

- Accountability and governance;

- Contestability and redress.

These principles are fairly standard; however, for certain systems and in certain contexts, some of them may become challenging to observe from the technical perspective. For example, a generative AI text engine may be difficult to query in terms of explaining why specific prompts generated certain outputs. I also note that these principles are high-level and largely non-technical, whereas the EU AI Act sets out not only similar principles (e.g., transparency), but also tangible requirements (e.g., data governance or record keeping).

The most distinctive aspect of the UK AI whitepaper is that it is targeted at individual sector regulators, rather than users, manufacturers, or suppliers (2) of AI directly. The UK approach could be called “regulating the regulators” and is different not just compared to the EU AI Act, but also other soft (3) and hard (4) laws applicable to AI in financial services – all of which primarily focus on the operators and applications of AI directly.

The UK whitepaper states that it does not initially intend to be binding (“the principles will be issued on a non-statutory basis and implemented by existing regulators”), but anticipates doing so further down the line (“following this initial period of implementation, and when parliamentary time allows, we anticipate introducing a statutory duty on regulators requiring them to have due regard to the principles”). It reads like the principles are expected to “morph” from soft to hard law over time.

Despite being explicitly about AI, the paper remains neutral with respect to specific AI tools or applications such as facial recognition (“regulating the use, not the technology”). It is one of very few regulatory papers to bring up the issue of AI autonomy, which I think is very interesting.

In an uncharacteristically candid admission, the whitepaper notes that “It is not yet clear how responsibility and liability for demonstrating compliance with the AI regulatory principles will be or should ideally be, allocated to existing supply chain actors within the AI life cycle. […] However, to further our understanding of this topic we will engage a range of experts, including technicians and lawyers.” In October 2020 the European Parliament adopted a resolution on civil liability for AI (5). The resolution advised strict liability for the operators of high-risk AI systems and fault-based liability for operators of all other AI systems. It is important to note that the definitions of “high-risk AI systems” as well as “operators” in the resolution are not the same as those proposed in the subsequently published draft of the EU AI Act, which may lead to ambiguities. Furthermore, European Parliament resolutions are not legally binding. So on one hand we have the UK admitting that liability, as a complex matter, requires further research, and on the other the EU proposing a simple approach which may be somewhat *too* simple for a complex, multi-actor AI value chain.

In another candid reflection, the UK government recognises that “[they] identified potential capability gaps among many, but not all, regulators, primarily in relation to: AI expertise [and] organisational capacity”. It remains to be seen how UK regulators (particularly financial and finance-adjacent ones, i.e., PRA, FCA, ICO) respond to these comments, particularly considering the shortage of AI talent and heavy competition from tech firms and financials.

The conclusions of the whitepaper are consistent with observations within the FS industry: while there is reasonably good awareness of high-level laws and regulations applicable to AI (MiFID II, GDPR, Equality Act 2010), there is a lack of a regulatory framework to connect them. There is also a perception of regulatory gaps which upcoming AI regulations are expected to bridge.

There are both similarities and differences between the UK government whitepaper and the EU AI Act. The latter is much more detailed and fleshed out, putting the EU ahead of the UK in terms of legislative developments. The EU AI Act is risk-based, focusing on prohibited and high-risk applications of AI. The prohibitions are unambiguous and this part of the Act is arguably rules-based, while the remainder is principles-based. The whole of the EU AI Act is outcomes-focused. The UK govt AI whitepaper explicitly rejects the risk-based approach (which is one of very few parts of the whitepaper that are completely unambiguous) and goes for a context-specific, principles-based and outcomes-oriented approach. The rejection of the risk-based approach reads like a clear rebuke of the EU approach.

The main parallel between the UK govt whitepaper and the EU AI Act is sector-neutrality. Both the EU AI Act and the UK govt whitepaper are meant to be applicable to all sectors, with detailed oversight to be delegated to respective sectoral regulators. We also need to be mindful that the primary focus of general regulations is likely to be applications of AI that may impact health, wellbeing, critical infrastructure, fundamental rights etc. Financial services – as important as they are – are not as critical as healthcare or fundamental rights.

Both laws are meant to complement existing regulations, albeit in different ways. The EU AI Act, as a hard law, needs to clearly and unambiguously fit within the matrix of existing regulations across various sectors: partly as a brand new regulation and partly as a complement of existing regulations (e.g. product safety or liability). The UK principles, as a soft law (at least initially) are meant to “complement existing regulation, increase clarity, and reduce friction for businesses”.

In what appears to be an explicit difference in approaches, the UK whitepaper states that it will “empower existing UK regulators to apply the cross-cutting principles”, and that “creating a new AI-specific, cross-sector regulator would introduce complexity and confusion, undermining and likely conflicting with the work of our existing expert regulators.” This stands in contrast with the proposed EU approach, which – despite being based on existing regulators – also anticipates the creation of a pan-EU European Artificial Intelligence Board (EAIB) tasked with a number of responsibilities pertaining to the implementation, requirements, advice and enforcement of the EU AI Act.

However, the UK government proposes the introduction of some “central functions” to ensure regulatory coordination and coherence. The functions are:

- Monitoring, assessment and feedback;

- Support coherent implementation of the principles;

- Cross-sectoral risk assessment;

- Support for innovators (including testbeds and sandboxes);

- Education and awareness;

- Horizon scanning;

- Ensuring interoperability with international regulatory frameworks.

Even though the whitepaper does not offer details about the logistics of these central functions, they do appear similar in principle to what the EAIB would be tasked with. The whitepaper does note that the central functions would initially be delivered by the government, with an option to deliver them independently in the long run. It references the UK’s Digital Regulation Cooperation Forum (DRCF) – comprising the Competition and Markets Authority (CMA), Ofcom, Information Commissioner’s Office, and the Financial Conduct Authority (FCA) – as an avenue for delivery.

The fundamental difference between the UK and EU approaches is enforceability: the UK whitepaper is (at least initially) a soft law, and the EU AI Act will be a hard law. However, it is reasonable for a regulator to expect that its guidance be followed and to challenge regulated firms if it is not, which means that a soft law is de facto almost a hard law. The EU AI Act has explicit provisions for monetary fines for non-compliance: up to EUR 30,000,000 or 6% of global annual turnover, whichever is higher (6). This is even stricter than GDPR’s EUR 20,000,000 or 4% of annual global turnover, whichever is higher. The UK AI whitepaper has none. It is entirely plausible that when the UK whitepaper evolves from soft to hard law, additional provisions will be made for monetary fines, but as it stands right now, this aspect is missing.
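To make the “whichever is higher” mechanics concrete, here is a minimal Python sketch using only the caps quoted above. The function name and the two example turnover figures are my own hypothetical illustrations, not anything taken from the EU AI Act or GDPR texts, and the sketch ignores everything else that would go into an actual fine.

```python
def fine_cap_eur(turnover_eur: int, fixed_cap_eur: int, turnover_pct: int) -> int:
    """Return the higher of a fixed cap and a percentage of annual turnover."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    return max(fixed_cap_eur, turnover_eur * turnover_pct // 100)


# Caps as quoted in the text: (fixed amount in EUR, percent of global annual turnover).
EU_AI_ACT_DRAFT = (30_000_000, 6)
GDPR = (20_000_000, 4)

# Hypothetical firms, chosen only to show both branches of "whichever is higher".
small_firm_turnover = 100_000_000
large_firm_turnover = 2_000_000_000

for name, turnover in [("small", small_firm_turnover), ("large", large_firm_turnover)]:
    ai_act = fine_cap_eur(turnover, *EU_AI_ACT_DRAFT)
    gdpr = fine_cap_eur(turnover, *GDPR)
    print(f"{name}: AI Act draft cap EUR {ai_act:,}, GDPR cap EUR {gdpr:,}")
```

For the smaller hypothetical firm the fixed amounts bind (EUR 30m and EUR 20m respectively); for the larger one the percentages do (EUR 120m and EUR 80m). With the figures quoted, both regimes switch from the fixed amount to the percentage at the same point, EUR 500m of annual turnover.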

Conclusion

Overall, it is somewhat challenging to provide a clear (re)view of the whitepaper. It has a lot of interesting recommendations, although most, if not all, have appeared in previous AI regulatory guidance worldwide and have otherwise been tried and tested. The whitepaper’s focus on regulators rather than end-users / operators of AI is both original and very interesting.

The time for AI regulation has come. I expect a lot of activity and discussions around it in the EU, UK, and beyond. I also expect the underlying values and principles to be relatively consistent worldwide, but – as the top-level comparison of the UK and EU proposals has shown – similar principles can be promoted, implemented and enforced within substantially different regulatory frameworks.

________________________________________________________

(1) https://www.wired.com/story/artificial-intelligence-regulation-european-union/

(2) The EU AI Act introduces a catch-all concept of “operator” which applies to all the actors in the AI supply chain.

(3) European Commission, ‘White Paper on Artificial Intelligence: A European Approach to Excellence and Trust’, 2020; European Commission, ‘Report on the Safety and Liability Implications of Artificial Intelligence, the Internet of Things and Robotics’, 2020; European Commission, ‘Liability for Artificial Intelligence and Other Emerging Digital Technologies’, 2019; The Ministry of Economy, Trade and Industry (‘METI’), ‘Governance Guidelines for Implementation of AI Principles ver. 1.0’, 2021; Hong Kong Monetary Authority (‘HKMA’), ‘High-Level Principles on Artificial Intelligence’, 2019; The International Organisation of Securities Commissions (‘IOSCO’), ‘The Use of Artificial Intelligence and Machine Learning by Market Intermediaries and Asset Managers’, https://www.iosco.org/library/pubdocs/pdf/IOSCOPD658.pdf ; Financial Stability Board, note 9 above; Bundesanstalt für Finanzdienstleistungsaufsicht (‘BaFin’), ‘Big Data Meets Artificial Intelligence – Results of the Consultation on BaFin’s Report’, 21 March 2019, https://www.bafin.de/SharedDocs/Veroeffentlichungen/EN/BaFinPerspektiven/2019_01/bp_19-1_Beitrag_SR3_en.html ; Information Commissioner’s Office, ‘Guidance on the AI Auditing Framework Draft Guidance for Consultation’, 2020; Information Commissioner’s Office, ‘Explaining Decisions Made with AI’, 2020; Personal Data Protection Commission, ‘Model Artificial Intelligence Governance Framework (2nd ed)’, Singapore, 2020.

(4) Directive 2014/65/EU of the European Parliament and of the Council of 15 May 2014 on Markets in Financial Instruments and Amending Directive 2002/92/EC and Directive 2011/61/EU; European Commission, ‘RTS 6’, 2016; Financial Conduct Authority, ‘The Senior Managers and Certification Regime: Guide for FCA Solo-Regulated Firms’ (‘SM&CR’), July 2019, https://www.fca.org.uk/publication/policy/guide-for-fca-solo-regulated-firms.pdf ; Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data (General Data Protection Regulation), 27 April 2016, https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679&from=EN; European Parliament, ‘Proposal for an Artificial Intelligence Act’, 2021; People’s Bank of China, ‘Guiding Opinions of the PBOC, the China Banking and Insurance Regulatory Commission, the China Securities Regulatory Commission, and the State Administration of Foreign Exchange on Regulating the Asset Management Business of Financial Institutions’, 27 April 2018.

(5) European Parliament resolution of 20 October 2020 with recommendations to the Commission on a civil liability regime for artificial intelligence (2020/2014(INL))

(6) Interestingly, poor data governance could trigger the same fine as engaging with outright prohibited practices.

Neal Stephenson “Fall, or Dodge in hell” book review

Joseph Conrad’s “Heart of darkness” is widely regarded as one of the masterpieces of 20th century literature (even though it was technically written in 1899). It directly inspired one cinematic masterpiece (Francis Ford Coppola’s 1979 “Apocalypse now”) and was allegedly an inspiration for another masterpiece-adjacent one (James Gray’s 2019 “Ad Astra”). I personally consider it to be rather hollow: less a masterpiece in its own right than a canvas on which a talented artist can draw their own, unique vision. Still, I’m not going to deny its impact and relevance.

Moreover, Conrad managed to contain the complete novella within 65 pages. Aldous Huxley fit “Brave new world” into 249 pages. J. D. Salinger fit “Catcher in the rye” in 198 pages, while George Orwell’s “1984” is approx. 350 pages long (and yes, page count depends on the edition, *obviously*). I seriously worry that in the current environment of “serially-serialised” novels these self-contained masterpieces would struggle to find a publisher. This, plus we’re also seeing true “bigorexia” in literature: novels have been exploding in size in recent years, and probably even more so in the broadly defined sci-fi / fantasy genre. Back when I was a kid, a book over 300 pages was considered long, and “bricks” like Isaac Asimov’s “Foundation” or Frank Herbert’s “Dune” were outliers. Nowadays no one bats an eyelid at 500 or 800 pages.

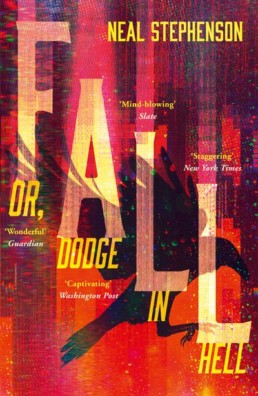

And this is where Neal Stephenson’s “Fall, or Dodge in hell” comes in. At 883 pages it’s a lot to read through, and I probably wouldn’t have picked it up had it not been referenced by two academics I greatly respect, professors Frank Pasquale and Steve Fuller in their discussion some time ago. I previously read Stephenson’s “Snow crash” as a kid and I was pretty neutral about it: it was an OK cyberpunk novel, but it failed to captivate me; I stuck to Gibson. Still, with such strong recommendations I was more than happy to check “Fall” out.

I will try to keep things relatively spoiler-free. In a nutshell, we have a middle-aged, more-money-than-God tech entrepreneur (the titular Dodge) who dies during a routine medical. His friends and family are executors of his last will, which orders them to have Dodge’s mind digitally copied and uploaded into a digital realm referred to as Bitworld (as opposed to Meatspace, i.e. the real, physical world – btw if you’re thinking “that’s not very subtle”; well, nothing in “Fall” is; subtlety or subtext are most definitely *not* the name of the game here). It takes hundreds of (mostly unnecessary) pages to even get to that point, but, frankly, that part is the book’s only saving grace, because Stephenson hits on something real, which I think usually gets overlooked in the mind uploading discourse: what will it feel like to be a disembodied brain in a completely alien, unrelatable, sensory stimuli-deprived environment? This is the one part (only part…) of the novel where Stephenson’s writing is elevated, and we can feel and empathise with the utter chaos and confusion of Dodge’s condition. There was a very, very interesting discussion on a related topic in a recent MIT Technology Review article titled “This is how your brain makes your mind” by psychology professor Lisa Feldman Barrett, which reads “consider what would happen if you didn’t have a body. A brain born in a vat would have no bodily systems to regulate. It would have no bodily sensations to make sense of. It could not construct value or affect. A disembodied brain would therefore not have a mind”. Stephenson’s Dodge is not in the exact predicament Prof. Barrett is describing (his brain wasn’t born in a vat), but given he has no direct memory of his pre-upload experience, it is effectively identical.

One last semi-saving grace is Stephenson’s extrapolated-to-the-extreme vision of information bubbles and tribes. His America is divided so extremely along the lines of (dis)belief and (mis)information filtering that it is effectively a federation of passively hostile states rather than anything resembling united states. That scenario seems to be literally playing out in front of our eyes.

Unfortunately, Stephenson quickly runs out of intellectual firepower (even though he is most definitely a super-smart guy – after all, he invented the metaverse a quarter of a century before Mark Zuckerberg brought it into vogue) and after a handful of truly original and thought-provoking pages we find ourselves in something between a digital Old Testament and the Middle Ages, where all the uploaded (“digitally reincarnated”) minds begin to participate in an agrarian feudal society, falling into all the same traps and making all the same mistakes mankind did centuries ago; a sci-fi novel turns fantasy. It’s all very heavy-handed, unfortunately; it feels like Stephenson was paid by the page and not by the book, so he inflated it beyond any reason. If there is any moral or lesson to be taken away from the novel, it escaped me. It feels like the author at one point realised that he could not take the story much further, or, possibly, just got bored with it and decided to wrap it up.

“Fall” is a paradoxical novel in my eyes: on one hand the meditation on the disembodied, desperately alone brain is fascinating from the Transhumanist perspective; on the other I honestly cannot recall the last time I read a novel so poorly written. It’s just bad literature, pure and simple – which is particularly upsetting because it is a common offence in sci-fi: bold ideas, bad writing. I have read so many sci-fi books where amazing ideas were poorly written up, and I have a real chip on my shoulder about it, as I suspect that sci-fi literature’s second-class citizen status in the literary world (at least as I have perceived it all my life, perhaps wrongly) might be down to its literary qualities. The one novel that comes to mind as a comparator volume-wise and author’s clout-wise is Neil Gaiman’s “American Gods”, and you really need to look no further to see, glaringly, the difference between quality and not-so-quality literature within the broadly defined sci-fi and fantasy genre: Gaiman’s writing is full of wonder with moments of genuine brilliance (Shadow’s experience being tied to a tree) whereas Stephenson’s is heavy, uninspired, and tired.

Against my better judgement, I read the novel through to the end (“if you haven’t read the book cover-to-cover, then it doesn’t count!” shouts my inner saboteur). Is “Fall” worth the time it takes to go through its 883 pages? No; sadly, it is not. You could read 2 – 3 other, much better books in the time it takes to go through it, and – unlike in that true-life story – there is no grand prize at the end.

What are the lessons to be taken away from 5 months’ worth of wasted evenings? Two, in my view:

Writing a quality novel is tough, but coming up with a quality, non-WTF ending is tougher; that is where so many fail (including Stephenson – spectacularly);

If a book isn’t working for you, just put it down. Sure, it may have a come-to-Jesus, revelatory ending, but… how likely is that? Bad novels are usually followed by even worse endings.

_______________________________________________________

(1) Another case in point: Thomas Harris’ Hannibal Lecter series of novels – it’s mediocre literature at best, but it allowed a number of very talented artists to develop fascinating, charismatic characters and compelling stories, both in cinema and on TV.

(2) In my personal view, both novels are on the edge of fantasy and slipstream, but I appreciate that not everyone will agree with this one.

Studio Irma, “Reflecting forward” at the Moco Museum

Studio Irma, “Reflecting forward” at the Moco Museum Amsterdam, Nov-2021.

“Modern art” is a challenging term because it can mean just about anything. One wonders whether in a century or two (assuming humankind makes it this long, which is a pretty brave assumption considering) present day’s modern art will still be considered modern, or will it have become regular art by then.

I’m no art buff by any stretch of the imagination, but certain works and artists speak to me and move me deeply – and they are very rarely old portraits or landscapes (J. M. W. Turner being one of the rare exceptions, his body of work is something else entirely). One of the more recent examples was the 2020 – 2021 Lux digital and immersive art showcase at 180 Studios in London (I expect Yayoi Kusama’s infinity rooms, also at Tate Modern, to make a similarly strong impression on me – assuming I’d be able to see them, as this exhibition has been completely sold out for many months now). I seem to respond particularly strongly to immersive, vividly colourful experiences, so it was no surprise that Studio Irma’s “Reflecting forward” infinity rooms project at the Moco Museum (“Moco” standing for “modern / contemporary”) caught my eye during a recent trip to the Netherlands.

I knew nothing of Studio Irma, “Reflecting forward”, or Moco prior to my arrival in Amsterdam; I literally Googled “Amsterdam museums” the day before. The Rijksmuseum was a no-brainer because of the “Night watch”, while the Van Gogh Museum was a relatively easy pass, but I was hungry for something original and interesting, something exciting and immersive – and then I came across “Reflecting forward” at the Moco. The Moco Museum is fascinating in its own right – a two-storey townhouse of a very modest size by museum standards, playing David to two Goliaths, sandwiched between the massive Van Gogh Museum and Rijksmuseum in Amsterdam’s museum district.

Studio Irma is the brainchild of Dutch modern artist Irma de Vries, who specialises in video mapping, augmented reality, immersive experiences, and other emerging technologies. “Reflecting forward” falls into this theme: it consists of 4 infinity rooms (“We all live in bubbles”, “Kaleidoscope”, “Diamond matrix”, “Connect the dots & universe”) filled mostly with vivid, semi-psychedelic video projections and sounds, delivering a powerful, amazingly immersive, dreamlike experience.

To the best of my knowledge, there is no one, universal definition of an infinity room. It is usually a light and / or visual installation in a room covered with mirrors (usually including the floor and the ceiling), which gives the effect of infinite space and perspective (people with less-than-perfect depth perception, such as myself, may end up bumping into the walls). Done right, the result can be phenomenally immersive, arresting, and just great fun. Infinity rooms are probably easier to show than to explain. The world’s best-known infinity rooms have been created by Yayoi Kusama, but, as per above, I have yet to experience those.

“Reflecting forward” occupies the entirety of Moco’s basement (and frankly, is quite easy to miss), which is a brilliant idea, because it blocks off all external stimuli and allows the visitors to fully immerse themselves in Irma’s work. Funny thing: main floors are filled with works by some of modern art’s most accomplished heavyweights (Banksy, Warhol, Basquiat, Keith Haring – even a small installation by Yayoi Kusama), and yet lesser-known Irma completely blows them out of the water.

I don’t know how from a seasoned art critic’s perspective “Reflecting forward” compares to Van Goghs or Rembrandts just a stone’s throw away (or even Warhols and Banksys upstairs at the Moco) in terms of artistic highbrow-ness. I’m sure it’d be an interesting, though ultimately very subjective discussion. Having visited both the Rijksmuseum and the Moco, I was captivated by “Reflecting forward” more than by classical Dutch masterpieces (with the exception of “Night watch”, which is… just mind-blowing).

With “Reflecting forward” Irma is promoting a new art movement, called connectivism, defined as “a theoretical framework for understanding and learning in a digital age. It emphasizes how internet technologies such as web browsers, search engines, and social media contribute to new ways of learning and how we connect with each other. We take this definition offline and into our physical world. through compassion and empathy, we build a shared understanding, in our collective choice to experience art”1.

An immersive experience is only immersive as a full package. Ambience and sound play a critical (even if at times subliminal) part in the experience. Studio Irma commissioned a bespoke soundscape for “Reflecting forward”, which takes the experience to a whole new level of dreamy-ness. The music is really quite hypnotic. The artist stated her intention to release it on Spotify, but as of the time of writing, it is not there yet.

I believe there is also an augmented reality (AR) app allowing us to take “Reflecting forward” outside, developed with a socially-distanced, pandemic-era art experience in mind, but I couldn’t find any mention of it on Moco’s website, and I haven’t tried it.

Overall, for the time I stayed in Moco’s basement, “Reflecting forward” transported me to beautiful, peaceful, and hypnotic places, giving me an “out of time” experience. Moco deserves huge kudos for giving the project the space it needed and allowing the artist to fully realise her vision. Irma de Vries’ talent and imagination shine in “Reflecting forward”, and I hope to experience more of her work in the future.

______________________________________

BCS: AI for Governance and Governance of AI (20-Jan-2021)

BCS, The Chartered Institute for IT (more commonly known simply as the British Computer Society) strikes me as *the* ultimate underdog in the landscape of British educational and science societies. It’s an entirely subjective view, but entities like The Royal Institution, The Royal Society, British Academy, The Turing Institute, British Library are essentially household names in the UK. They have pedigree, they are venerated, they are super-exclusive, money-can’t-buy brands – and rightly so. Even enthusiasts-led British Interplanetary Society (one of my beloved institutions) is enjoying well-earned respect in both scientific and entrepreneurial circles, while BCS strikes me as being sort of taken for granted and somewhat invisible outside the professional circles, which is a shame. I first came across BCS by chance a couple of years ago, and have since added it to my personal “Tier 1” of science events.

Financial services are probably *the* number one non-tech industry most frequently appearing in BCS presentations, which isn’t surprising given the amount of crossover between finance and tech (“we are a tech company with a banking license” sort of thing).

One thing BCS does exceptionally well is interdisciplinarity, which is something I am personally very passionate about. In their many events BCS goes well above and beyond technology and discusses topics such as environment (their event on the climate aspects of cloud computing was *amazing*!), diversity and inclusion, crime, society, even history (their 20-May-2021 event on the historiography of the history of computing was very narrowly beaten by receiving my first Covid jab as the best thing to have happened to me on that day). Another thing BCS does exceedingly well is balance general public-level accessibility with really specialist and in-depth content (something RI and BIS also do very well, while, in my personal opinion, RS or Turing… not so well). BCS has a major advantage of “strength in numbers”, because their membership is so broad that they can recruit specialist speakers to present on any topic. Their speakers tend to be practitioners (at least in the events I attended), and more often than not they are fascinating individuals, which oftentimes turns a presentation into a treat.

The “AI for Governance and Governance of AI” event definitely belongs to the “treat” category thanks to the speaker Mike Small, who – with 40+ years of IT experience – definitely knew what he was talking about. He started off with a very brief intro to AI (which, frankly, beats anything I’ve seen online for conciseness and clarity). Moving on to governance, Mike very clearly explained the difference between using AI in governance functions (i.e. mostly Compliance / General Counsel and tangential areas) and governance *of* AI, i.e. the completely new (and in many organisations as-yet-nonexistent) framework for managing AI systems and ensuring their compliant and ethical functioning. While the former is becoming increasingly understood and appreciated conceptually (as for implementation, I would say that varies greatly between organisations), the latter is far behind, as I can attest from observation. The default thinking seems to be that AI is just another new technology, and should be approached purely as a technological means to a (business) end. The challenge is that with its many unique considerations AI (Machine Learning to be exact) can be an opaque means to an end, which is incompatible (if not downright non-compliant) with prudential and / or regulatory expectations. AI is not the first technology to require an interdisciplinary / holistic governance perspective in the corporate setting, though: cloud outsourcing has been an increasingly tightly regulated technology in financial services since around 2018.

The eight unique AI governance challenges singled out by Mike are:

- Explainability;

- Data privacy;

- Data bias;

- Lifecycle management (aka model or algorithm management);

- Culture and ethics;

- Human involvement;

- Adversarial attacks;

- Internal risk management (this one, in my view, may not necessarily belong here, as risk management is a function, not a risk per se).

The list, as well as the comparison of global AI frameworks that followed, were what really triggered me in the presentation (in the positive way) because of their themes-based approach to governance of AI (which happens to be one of my academic research interests). The list of AI regulatory guidances, best practices, consultations, and most recently regulations proper has been growing rapidly for at least 2 years now (and that is excluding ethics, where guidances started a couple of years earlier, and currently far outnumber anything regulation-related). Some of them come from government bodies (e.g. the European Commission), others from regulators (e.g. the ICO), others from industry associations (e.g. IOSCO). I reviewed many of them, and they all contribute meaningful ideas and / or perspectives, but they’re quite difficult to compare side-by-side because of how new and non-standardised the area is. Mike Small is definitely onto something by extracting comparable, broad themes from long and complex guidances. I suspect that the next step will be for policy analysts, academics, the industry, and lastly the regulators themselves to start conducting analyses similar to Mike’s to come up with themes that can be universally agreed upon (for example model management – that strikes me as rather uncontroversial) and those where lines of political / ideological / economic divides are being drawn (e.g. data localisation or handling of personal data in general).

One thing’s for sure: regulatory (be it soft or hard laws) standards for the governance of AI are beginning to emerge, and there are many interesting developments ahead in the foreseeable future. It’s better to start paying attention at the early stages than to play a painful and expensive game of catch-up in a couple of years.

You can replay the complete presentation here.

Accounting for panic runs: The case of Covid-19 (14-Dec-2020)

Professor Gulnur Muradoglu is someone I hold in high esteem. She used to be my Behavioural Finance lecturer at the establishment once known as Cass Business School in London, back in the day when it was still called Cass Business School. It was my first proper academic encounter with behavioural finance, which led to a lifelong interest; Professor Muradoglu deserves substantial personal credit for that.

Professor Muradoglu has since moved to Queen Mary University of London, where she became a director of the Behavioural Finance Working Group (BFWG). BFWG has been organising fascinating and hugely influential annual conferences for 15 years now, as well as standalone lectures and events. [Personal note: as someone passionate about behavioural finance, I find it quite baffling how the discipline has not (or at least not yet) spilled over to mainstream finance. Granted, some business schools now offer BF as an elective in their Master’s and / or MBA programmes, and concepts such as bias or bubble have gone mainstream, but it’s still a niche area. I was not aware of BF being explicitly incorporated in the investment decision-making process by several of the world’s brand-name asset managers I worked for or with (though, in their defence, concepts such as herding or asset price bubbles are generally obvious to the point of goes-without-saying). Finding a Master’s or PhD programme explicitly focused on behavioural finance is hugely challenging because there are very few universities offering those (Warwick University is one esteemed exception that I’m aware of). The same applies to BF events, which are very few and far between – which is yet another reason to appreciate BFWG’s annual conference.]

The panic runs lecture was an example of BFWG’s standalone events, with new research presented jointly by Prof. Muradoglu and her colleague Prof. Arman Eshraghi (co-author of one of my all-time favourite academic articles “hedge funds and unconscious fantasy”) from Cardiff University Business School. Presented nine months after the first Covid lockdown started in the UK, it was the earliest piece of research analysing Covid-19-related events from the behavioural finance perspective I was aware of.

The starting point for Muradoglu and Eshraghi was a viral video of a UK critical care nurse, Dawn Bilbrough, appealing to the general public to stop panic buying after she was unable to get any provisions for herself after a 48-hour-long shift (the video that inspired countless individuals, neighbourhood groups, and businesses to organise food collections for the NHS staff in their local hospitals). They observed that:

- Supermarket runs are unprecedented, although they have parallels with bank runs;

- Supermarket runs are incompatible with the concept of “homo economicus” (rational and narrowly self-interested economic agent).

They argue that viewing individuals as emotional and economic (as opposed to rational and economic) is more compatible with phenomena such as supermarket runs (as well as just about any other aspects of human economic behaviour if I may add). They make a very interesting (and so often overlooked) distinction between risk (a known unknown) and uncertainty (unknown unknown), highlighting humans’ preference for the former over the latter, and frame supermarket runs as acts of collective flight from the uncertainty of the Covid-19 situation at the very beginning of the global lockdowns. Muradoglu and Eshraghi strongly advocate in favour of Michel Callon’s ANT (actor-network theory) framework whereby the assumption of atomised agents as the only unit is enhanced to include the network of agents as well. They then overlay it with the “an engine, not a camera” concept from Donald MacKenzie whereby showing and sharing images of supermarket runs turns from documenting into perpetuating. They also strongly object to labelling the Covid-19 pandemic as either an externality or a black swan, because it is neither (as far as I can recall, there weren’t any attempts to frame Covid-19 as a black swan, because with smaller, contained outbreaks of Ebola, SARS, MERS, H1N1 in recent years it would be impossible to frame Covid as an entirely unforeseeable event – still, it’s good to hear it from experts).

Even though I am not working in the field of economics proper, I do hope that in the 21st century homo economicus is nothing more than a theoretical academic concept. Muradoglu and Eshraghi simply and elegantly prove that in the face of a high-impact adverse event alternative approaches do a much better job of explaining observed reality. I would be most keen for a follow-up presentation where the speakers could propose how these alternative approaches could be used to inform policy or even business practices to mitigate, counteract, or – ideally – prevent behaviours such as panic buying or other irrational group economic behaviours which could lead to adverse outcomes, such as crypto investing (which, in my view, cannot be referred to as “investing” in the de facto absence of an asset with any fundamentals; it is pure speculation).

Somewhat oddly, I haven’t been able to find Muradoglu and Eshraghi’s article online yet (I assume it’s pending publication). You can see the entire presentation recording here.

“Nothing ever really disappears from the Internet” – or does it? (25-Apr-2022)

How many times have you tried looking for that article you wanted to re-read only to find yourselves losing it an hour or two later, unsure as to whether you’ve ever read that article in the first place or whether that has been just a figment of your imagination?

I developed OCD some time in my teen years (which probably isn’t very atypical). One aspect of my OCD is really banal (“have I switched off the oven…?!”; “did I lock the front door…?!”) and easy to manage with the help of a camera-phone. Another aspect of my OCD is compulsive curiosity with a hint of FOMO (“what if that one article I miss is the one that would change my life forever…?!”). With the compulsion to *know* came the compulsion to *remember*, which is where the OCD can sometimes get a bit more… problematic. I don’t just want to read everything – I want to remember everything I read (and where I read it!) too.

When I was a kid, my OCD was mostly relegated to print media and TV. Those of us old enough to remember those “analogue days” can relate to how slow and challenging chasing a stubborn forgotten factoid was (“that redhead actress in one of these awful Jurassic Park sequels… OMG, I can see her face on the poster in my head, I just can’t see the poster close enough to read her name… she was in that action movie with that other blonde actress… what is her ****ing name?!” – that sort of thing).

Then came the Web, followed by smartphones.

Nowadays, with smartphones and Google, finding information online is so easy and immediate that many of us have forgotten how difficult it was a mere 20-something years ago. Moreover, more and more people have simply never experienced this kind of existence at all.

We get more content pushed our way than we could possibly consume: Chrome Discover recommendations; every single article in your social media feeds; all the newsletters you receive and promise yourself to start reading one day (spoiler alert: you won’t; same goes for that copy of Karl Marx’s “Capital” on your bookshelf); all the articles published daily by your go-to news source; etc. Most people read whatever they choose to read, close the tab, and move on. Some remember what they’ve read very well, some vaguely, and over time we forget most of it (OK, so it probably is retained on some level [like these very interesting articles claim 1,2], gradually building up our selves much like tiny corals build up a huge coral reef, but hardly anyone can recall titles and topics of the articles they read days, let alone years prior).

A handful of the articles we read will resonate with us really strongly over time. Some of us (not very many, I’m assuming) will Evernote or OneNote them as their personal, local-copy notes; some will bookmark them; and some will just assume they will be able to find these articles online with ease. My question to you is this: how many times have you tried looking for that article you wanted to re-read only to find yourselves losing it an hour or two later, unsure as to whether you’ve ever read that article in the first place or whether that has been just a figment of your imagination? (if your answer is “never”, you can probably skip the rest of this post). It happened to me so many, many times that I started worrying that perhaps something *is* wrong with my head.

And Web pages are not even the whole story. Social media content is even more “locked” within the respective apps. Most (if not all) of them can technically be accessed via the Web and thus archived, but this rarely works in practice. It works for LinkedIn, because LI was developed as browser-first application, and it’s generally built around posts and articles, which lend themselves to browser viewing and saving. Facebook was technically developed as browser-first, but good luck saving or clipping anything. FB is still better than Instagram, because with FB we can still save some of the images, whereas with Instagram that option is practically a non-starter. Insta wants us to save our favourites within the app, thus keeping it fully under its (i.e. Meta’s) control. That means that favourited images can at any one time be removed by the posters, or by Instagram admins, without us ever knowing. I don’t know how things work with Snap, TikTok, and other social media apps, because I don’t use them, but I suspect that the general principle is similar: content isn’t easily “saveable” outside the app.

Then there are ebooks, which are never fully offline the way paper books are. The Atlantic article 3 highlights this with a hilarious example of the word “kindle” being replaced with “Nook” in a Nook e-reader edition of… War and Peace.

Then came Iza Kaminska’s 2017 FT article “The digital rot that threatens our collective memory” and I realised that maybe nothing’s wrong with my head, and that maybe it’s not just me. While Iza’s article triggered me, its follow-up in The Atlantic nearly four years later (“The Internet is rotting”) super-triggered me. The part “We found that 50 percent of the links embedded in Court opinions since 1996, when the first hyperlink was used, no longer worked. And 75 percent of the links in the Harvard Law Review no longer worked” felt like a long-overdue vindication of my memory (and sanity). It really wasn’t me – it was the Internet.

It really boggles the mind: in just 2 – 3 decades the Web has replaced libraries and physical archives as humankind’s primary source of reference information (and arguably fiction as well, though paper books are still holding reasonably strong for now). The Internet is comprised of websites, and websites are comprised of posts and articles – all of which have a unique reference in the form of the URL. I can’t talk for other people, but I have always implicitly assumed that information lived pretty much indefinitely online. On the whole it may be the case (the reef so to speak), but that does not hold for specific pages (individual corals) anywhere near as much as I had assumed. There is, of course, the Internet Archive’s Wayback Machine, a brilliant concept and a somewhat unsung hero of the Web – but WM is far from solid (example: I found a dead link [ http://www.psychiatry.cam.ac.uk/blog/2013/07/18/bad-moves-how-decision-making-goes-wrong-and-the-ethics-of-smart-drugs/ ] in one of my blog posts [“Royal Institution: How to Change Your Mind”]. The missing resource was posted on one of the websites within the domain of Cambridge University, which indicates it is high-quality, valuable, meaningful content – and yet the Wayback Machine has not archived it. This is not a criticism of WM nor its parent the Internet Archive – what they do deserves the highest praise; it’s more about recognising the challenges and limitations it’s facing).

So, with my sanity vindicated, but my OCD and FOMO being as voracious as ever – where do we go from here?

There is the local copy / offline option with Evernote, OneNote, and similar Web clippers. I have been using Evernote for years now (this is not an endorsement), and, frankly, it’s far from perfect, particularly on a mobile (I said this was not an endorsement…) – but everything else I have come across has been even worse; and, frankly, there are surprisingly few players in that niche. Still, Evernote is the best I could find for all-in-one article clipping and saving – it’s either this or “save article as”, which is a bit too… 90’s. Then there is the old-fashioned direct e-mail newsletter approach, which, as proven by Substack, can still be very popular. I’m old enough to remember the life pre-cloud (or even pre-Web, albeit very faintly, and only because the Web arrived in Poland years after it arrived in some of the more developed Western countries) – C:\ drive, 1.44Mb floppy disks, and all that – and that, excuse the cheap pun, clouds my judgement: I embrace the cloud and I love the cloud, but I want to have a local, physical copy of my news archive. However, as Drew Justin rightly points out in his Wired article “As with any public good, the solution to this problem [the deterioration of the infrastructure for publicly available knowledge] should not be a multitude of private data silos, only searchable by their individual owners, but an archive that is organized coherently so that anyone can reliably find what they need”; me updating my personal archive in Evernote (at the cost of about 2hrs of admin per week) is a crutch, an external memory device for my brain. It does nothing for the global, pervasive link rot. Plus, Evernotes of this world don’t work with social media apps very well. There are dedicated content clippers / extractors for different social media apps, but they’re usually a bit cumbersome, and don’t really “liberate” the “locked” content in any meaningful way.

I see two ways of approaching this challenge:

- Beefing up the Internet Archive, the International Internet Preservation Consortium, and similar institutions to enable duplication and storage of as wide a swath of Internet as possible, as frequently as possible. That would require massive financial outlays and overcoming some regulatory challenges (non-indexing requests, the right to be forgotten, GDPR and GDPR-like regulations worldwide). Content locked in-app would likely pose a legal challenge to duplicate and store if the app sees itself as the owner of all the content (even if it was generated by the users).

- Accepting the link rot as inevitable and just letting go. It may sound counterintuitive, but humankind has lost countless historical records and works of art from the sixty or so centuries of the pre-Internet era and somehow managed to carry on anyway. I’m guessing that our ancestors may have seen this as inevitable – so perhaps we should too?

I wonder… is this ultimately about our relationship with our past? Perhaps trying to preserve something indefinitely is unnatural, and we should focus on the current and the new rather than obsess over archiving the old? This certainly doesn’t agree with me, but perhaps I’m the one who’s wrong here…

________________________________________________

1 https://www.wired.co.uk/article/forget-idea-we-forget-anything

Ron Kalifa 2021 UK Fintech Review

Having delivered the 2020 UK budget, the Chancellor of the Exchequer Rishi Sunak1 commissioned / appointed a seasoned banking / FinTech executive Ron Kalifa OBE to conduct an independent review on the state of UK FinTech industry. The objective of the review was not merely an assessment of the current state of UK FinTech, but also developing a set of recommendations to support its growth, adoption, as well as global position and competitiveness.

On that last point, measuring UK’s (or any other country’s) position in FinTech has always been a combination of science and art. While certain financial metrics are fairly unambiguous and comparable (e.g. number or total value of IPOs, or total value of M&A transactions), the ranking of a given country in FinTech is more nuanced. Should a total value of all FinTech funding / deals be used on absolute basis? Or perhaps adjusted per capita? What about legal and regulatory environment – should it be captured in the score as well?

The abovementioned considerations are usually pondered by researchers in specialist publications or market data companies, while most people in financial services simply work out some sort of internal weighted average of the above, and usually get the Top 3 (the US, the UK, and Singapore) fairly consensually.

Lastly, the FinTech industry is unlikely to flourish without world-class educational institutions and a certain entrepreneurial culture. UK universities are among the very best in the world across all disciplines in all global university rankings. The entrepreneurial culture, as an intangible, is challenging to quantify, but most people will agree that London is full of driven, risk-taking, entrepreneurial people from all over the world.

One way or another, the UK’s FinTech powerhouse status is indisputable, and the Chancellor’s plan to build on that makes perfect sense. Brexit is the elephant in the room, because by leaving the single market, the UK created a barrier for the free movement of talent where there was none before. Brexit also arguably sent a less-than-positive message to the traditionally globalised / internationalised FinTech industry at large. On the other hand, there is some chance that Brexit could enable a more global (as opposed to EU-focused) expansion of UK FinTech, although this claim can and will only be verified over time.

To his credit, Ron Kalifa approached his review from the “to do list” perspective of actions and recommendations rather than analysis per se. As a result, his document is highly actionable as well as reasonably compact.

The Kalifa Review is broken into five main sections:

- Policy and Regulation – which focuses on creating a new regulatory framework for emerging technologies and ensuring that FinTech becomes an integral part of UK trade policy (interestingly, this part includes a section on regulatory implications of AI as regards the PRA and FCA rules; this is the highest profile official UK publication to address these considerations that I’m aware of. Prior to the Kalifa Review, AI regulation in financial services had been discussed at length from the GDPR perspective in the ICO’s publications, but those do not directly feed into government policies).

- Skills and Talent – interestingly, the focus here is *not* on competing for Oxbridge / Russell Group graduates nor on expanding the portfolio of finance and technology Bachelor’s and Master’s courses at UK universities. Instead the focus is on retraining and upskilling the existing adult workforce, mostly in the form of low-cost short courses. This aligns with broader civilisational / existential challenges such as the disappearance of the “job for life” and the need for lifelong education in an increasingly complex and interconnected world. Separately, Kalifa proposes a new category of UK visa for FinTech talent to ensure the UK’s continued access to high-quality international talent, which represents approx. 42% of the FinTech workforce.

- Investment – in addition to standard investment incentives such as tax credits, the Kalifa Review recommends improvement of the UK IPO environment as well as creation of a (new?) global family of FinTech indices to give the sector greater visibility (this is an interesting recommendation for anyone who has ever worked for a market data vendor; indices are normally created in response to market demand and there are FinTech indices within existing, established market index families. Creating a new family of indices is something different altogether).

- International Attractiveness and Competitiveness.

- National Connectivity – this point is particularly interesting, as it seems solely focused on countering London-centric thinking and recognising and strengthening other FinTech hubs across the UK.

The Kalifa Review makes a crucial sixth recommendation: to create a new organisational link which the Review proposes to call the Centre for Finance, Innovation, and Technology (CFIT). The Review is slightly vague on how it envisions the CFIT structurally and organisationally (it does mention that it would be a public / private partnership but does not go into further details). CFIT is the one recommendation of the Kalifa Review which seems more of a vision than a fully fleshed-out idea, but Ron Kalifa himself spoke about it prominently in his live appearances and gave the impression of CFIT being the organisational structure upon which the delivery of his recommendations would largely hinge.

Upon release, the Kalifa Review was met with a great deal of interest from the financial services industry, as well as the legal profession, policymakers, business leaders and academics. Ron Kalifa made multiple appearances in different online presentations on the topic, and most brand-name law firms carefully analysed and summarised his report. That, however, was the visible and easier part. The real question is to what extent the recommendations made in the Kalifa Review will be reflected in government policies for years to come.

You can read the report in its entirety here.

__________________________________________

(1) Rishi Sunak took over the job of the Chancellor of the Exchequer from his predecessor, Sajid Javid, less than a month prior to the publication of Budget 2020. Consequently, it can be debated whether the FinTech report was Rishi Sunak’s original idea, or one he inherited.

Frank Pasquale “Human expertise in the age of AI”

Frank Pasquale “Human expertise in the age of AI” Cambridge talk 26-Nov-2020

Frank Pasquale is a Professor of Law at the Brooklyn Law School and is one of the few “brand name” scholars in the nascent field of AI governance and regulation (Lilian Edwards and Luciano Floridi are other names that come to mind).

I had the pleasure of attending his presentation for the Trust and Technology Initiative at the University of Cambridge back in Nov-2020. The presentation was tied to Pasquale’s then-forthcoming book titled “New Laws of Robotics: Defending Human Expertise in the Age of AI”.

Professor Pasquale opened his presentation by listing what he describes as “paradigm cases for rapid automation” (areas where AI and / or automation have already made substantial inroads, or are very likely to do so in the near future) such as manufacturing, logistics, agriculture; as well as those I personally disagree with: transport, mining (1). He argues for AI complementing rather than replacing humans as the key to advancing the technology in many disciplines (as well as advancing those disciplines themselves) – a view I fully concur with.

Image credit: Stifterverband, CC BY 3.0, via Wikimedia Commons

He then moves on to the critical – though largely overlooked – distinction between governance *of* artificial intelligence vs. governance *by* artificial intelligence (the latter being obviously more of a concern, whilst the former has until recently been an afterthought or a non-thought). He remarked that the push for researchers to increasingly determine policy is not technical, but political. It prioritises researchers over subject matter experts, which is not necessarily a good thing (n.b. I cannot say I witnessed that push in financial services, but perhaps in other industries it *is* happening?)

Prof. Pasquale identifies three possible ways forward:

- AI developed and used above / instead of domain experts (“meta-expertise”);

- AI and professionals melt into something new (“melting pot”);

- AI and professionals maintain their distinctiveness (“peaceable kingdom”).

In conclusion, Pasquale proposes his own new laws of robotics:

- Complementarity: Intelligence Augmentation (IA) over Artificial Intelligence (AI) in professions;

- Robots and AI should not fake humanity;

- Cooperation: no arms races;

- Attribution of ownership, control, and accountability to humans.

Pasquale’s presentation and views resonated with me strongly because I arrived at similar conclusions not through academic research, but by observation, particularly in the financial services and legal services industries. Pasquale is one of the relatively few voices who temper some of the (over)enthusiasm regarding how much AI will be able to do for us in the very near future (think: fully autonomous vehicles), as well as some of the doom and gloom regarding how badly AI will upend / disrupt our lives (think: “35% of current jobs in the UK are at high risk of computerisation”). I find it very interesting that for a couple of years now we’ve had all kinds of business leaders, thought leaders, consultants etc. express all kinds of extreme visions of the AI-powered future, but hardly any with any sort of common-sense middle-ground views. Despite AI evolving at breakneck speed, it seems that our visions and projections of it evolve more slowly. The acknowledgment that fully autonomous vehicles are proving more challenging and taking longer to develop than anticipated only a few years back has been muted to say the least. Despite the frightening prognoses regarding unemployment, it has actually been at record lows for years now in the UK, even during the pandemic (2)(3). [Speaking from closer proximity professionally, paralegals were one profession that was singled out as being under immediate existential threat from AI – and I am not aware of that materialising in any way. On the contrary, price competition for junior lawyers in the UK has recently reached record heights (4)(5).]

It is obviously incredibly challenging to keep up with a technology developing faster than just about anything else in the history of mankind – particularly for those of us outside the technology industry. Regulators and policy makers (to a lesser extent also lawyers and legal scholars) have in recent years been somewhat on the back foot in the face of the rapid development of AI and its applications. However, thanks to some fresh perspectives from people like Prof. Pasquale this seems to be turning around. Self-regulation (which, as financial services proved in 2008, sometimes fails spectacularly) and abstract / existential high-level discussions are being replaced with concrete, versatile proposals for policy and regulation, which focus on industries, use cases, and outcomes rather than details and nuances of the underlying technology.

__________________________________________

(1) Plus a unique case of AI in healthcare. While Pasquale adds healthcare to the “rapid paradigm shift” list, the pandemic-era evidence raises doubts over this: https://www.technologyreview.com/2021/07/30/1030329/machine-learning-ai-failed-covid-hospital-diagnosis-pandemic/

(3) https://www.bbc.com/news/business-52660591

(4) https://www.thetimes.co.uk/article/us-law-firms-declare-war-with-140-000-starting-salaries-gl87jdd0q

(5) https://www.ft.com/content/882c9f72-b377-11e9-8cb2-799a3a8cf37b